Hi,

The X axis spans from 0 to mapper.getWidth().

The Y axis spans from mapper.getHeight() (for the first point of the centerline) to 0 (for the last point of the centerline).

The image is in the Z=0 plane.

So for each point of the centerline, with index `pointIndex` going from 0 to `centerline.getNumberOfPoints() - 1`:

- Z will be `0`
- Y can be computed using `mapper.getHeight() - centerline.getDistancesToFirstPoint()[pointIndex]`
- X will be `mapper.getWidth()` if `mapper.getCenterPoint()` is null; otherwise it will be `dot(centerPoint - centerlinePoint, localXDirection)`, with `localXDirection` being the local x axis for this point, which is computed either from the uniform orientation or from `mapper.getOrientationDataArray()`
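Putting these formulas together, here is a minimal sketch in plain JavaScript, assuming the centerline data has already been read into plain arrays (the function `centerlineToCprPlane` and its parameters are hypothetical). Note that it already includes the constant `width / 2` offset on X that is identified as a correction later in this thread, so its X differs from the formula above.

```javascript
// Hypothetical helper: map centerline points to CPR-plane coordinates,
// before the actor's user matrix is applied.
//   pointsFlat       [x0, y0, z0, x1, y1, z1, ...], e.g. centerline.getPoints().getData()
//   distances        distance of each point to the first one, e.g. centerline.getDistancesToFirstPoint()
//   centerPoint      e.g. mapper.getCenterPoint(), or null
//   localXDirections one unit vector per point (only used when centerPoint is set)
function dot3(a, b) {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

function centerlineToCprPlane(pointsFlat, distances, width, height, centerPoint, localXDirections) {
  const result = [];
  for (let i = 0; i < pointsFlat.length / 3; i++) {
    const p = [pointsFlat[3 * i], pointsFlat[3 * i + 1], pointsFlat[3 * i + 2]];
    // Y decreases with the distance travelled along the centerline
    const y = height - distances[i];
    // X: constant width / 2 offset, plus the projection of (centerPoint - p) on the local x axis
    let x = width / 2;
    if (centerPoint) {
      const d = [centerPoint[0] - p[0], centerPoint[1] - p[1], centerPoint[2] - p[2]];
      x += dot3(d, localXDirections[i]);
    }
    // The image lies in the Z = 0 plane
    result.push([x, y, 0]);
  }
  return result;
}
```

With vtk.js objects, the inputs would come from the calls quoted above (`getPoints().getData()`, `getDistancesToFirstPoint()`, `getCenterPoint()`, `getWidth()`, `getHeight()`).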

The result gives the point coordinates for the mapper, but the actor (the ImageSlice actor) can also have a transform matrix.

Hello, thank you. But how do I calculate `localXDirection`? I never got it right.

To compute the localXDirection, you will need the local orientation at that point of the centerline.

This orientation is in fact a quaternion that you can get in two different ways depending on where you are on the centerline:

- If you are on a point of the centerline, for example at index `centerlineIndex`, then your orientation will be `mapper.getOrientedCenterline().getOrientations()?.[centerlineIndex]`. If there is no orientation, you can consider that the quaternion is the identity quaternion.
- If you need to interpolate, you can use `mapper.getCenterlinePositionAndOrientation()` with the right distance argument. You can use the array returned by `mapper.getOrientedCenterline().getDistancesToFirstPoint()` to know the distance of each point from the beginning of the centerline (`findPointIdAtDistanceFromFirstPoint()` does the reverse lookup).
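`getCenterlinePositionAndOrientation()` is the way to go here. To illustrate what such an interpolation involves, here is a hand-rolled sketch (the function `interpolateAtDistance` is hypothetical and is not the mapper's implementation): find the segment that contains the distance, interpolate the position linearly, and interpolate the quaternion with normalized linear interpolation (nlerp), a simpler substitute for slerp. Quaternions are assumed to be `[x, y, z, w]`, as in gl-matrix.

```javascript
// Hypothetical helper, not the vtk.js implementation.
//   pointsFlat   [x0, y0, z0, x1, y1, z1, ...]
//   distances    distance of each point to the first one (increasing)
//   orientations array of [x, y, z, w] quaternions, or undefined
function lerpScalar(a, b, t) {
  return a + (b - a) * t;
}

function interpolateAtDistance(pointsFlat, distances, orientations, distance) {
  const identity = [0, 0, 0, 1];
  const n = distances.length;
  if (n < 2) {
    return { position: Array.from(pointsFlat.slice(0, 3)), orientation: (orientations && orientations[0]) || identity };
  }
  // Clamp the requested distance to the centerline
  const d = Math.min(Math.max(distance, distances[0]), distances[n - 1]);
  // Find the segment [i, i + 1] that contains d
  let i = 0;
  while (i < n - 2 && distances[i + 1] < d) i++;
  const segmentLength = distances[i + 1] - distances[i];
  const t = segmentLength > 0 ? (d - distances[i]) / segmentLength : 0;

  const position = [0, 1, 2].map((k) => lerpScalar(pointsFlat[3 * i + k], pointsFlat[3 * (i + 1) + k], t));

  const qa = (orientations && orientations[i]) || identity;
  let qb = (orientations && orientations[i + 1]) || identity;
  // q and -q are the same rotation: flip qb to interpolate along the shortest path
  if (qa[0] * qb[0] + qa[1] * qb[1] + qa[2] * qb[2] + qa[3] * qb[3] < 0) {
    qb = qb.map((c) => -c);
  }
  const q = [0, 1, 2, 3].map((k) => lerpScalar(qa[k], qb[k], t));
  const length = Math.hypot(q[0], q[1], q[2], q[3]);
  return { position, orientation: q.map((c) => c / length) };
}
```

At the exact midpoint between two orientations, nlerp and slerp give the same rotation; elsewhere nlerp's angular speed is slightly uneven, which is usually acceptable between closely spaced centerline points.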

When you have the quaternion, you can simply rotate the vector [1, 0, 0] using this quaternion.

You can use the vec3 module of the gl-matrix library for this:

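```javascript
import { vec3 } from 'gl-matrix';

// q is the orientation quaternion of the point, obtained as described above
const localXDirection = vec3.create();
vec3.transformQuat(localXDirection, [1, 0, 0], q);
```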

OK, thank you very much. May I ask another question: how do I recompute the coordinates of the centerline points in the CPR plane when rotating the angle around the centerline in stretched mode?

Oh yes, I forgot the tangent direction: instead of rotating [1, 0, 0] by the quaternion, you have to rotate `mapper.getTangentDirection()`.
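For reference, `vec3.transformQuat()` applies the standard rotation of a vector by a unit quaternion. Here is a gl-matrix-free sketch of that computation applied to the tangent direction, with the identity-quaternion fallback for points without an orientation (`rotateByQuat` and `localXDirectionAt` are hypothetical names; quaternions are assumed to be `[x, y, z, w]`, as in gl-matrix).

```javascript
// Rotate v by the unit quaternion q = [x, y, z, w], as vec3.transformQuat does:
// v' = v + 2w (u x v) + 2 u x (u x v), with u = (x, y, z)
function crossVec(a, b) {
  return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]];
}

function rotateByQuat(v, q) {
  const u = [q[0], q[1], q[2]];
  const uv = crossVec(u, v);
  const uuv = crossVec(u, uv);
  return [0, 1, 2].map((k) => v[k] + 2 * q[3] * uv[k] + 2 * uuv[k]);
}

// Local x axis at a centerline point: the tangent direction (e.g. mapper.getTangentDirection())
// rotated by the point's orientation, or by the identity quaternion when there is none
function localXDirectionAt(orientations, index, tangentDirection) {
  const q = (orientations && orientations[index]) || [0, 0, 0, 1];
  return rotateByQuat(tangentDirection, q);
}
```

For example, a quarter turn around Z, `[0, 0, Math.SQRT1_2, Math.SQRT1_2]`, maps the tangent `[1, 0, 0]` to approximately `[0, 1, 0]`.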

To make sure that your calculations are right, you can visualize the centerline using the CPR mapper by adding this code in Sources\Rendering\OpenGL\ImageCPRMapper\index.js at line 806:

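```javascript
tcoordFSImpl.push(
  // Paint fragments whose horizontal offset from the centerline is below 1.0 in red
  'if (abs(horizontalOffset) < 1.0)',
  '{',
  '  gl_FragData[0] = vec4(1.0, 0.0, 0.0, 1.0);',
  '  return;',
  '}'
);
```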

Does the actor have any transform? If that’s the case, you should apply its transform to your line.

The transform of an actor is a mat4 that you can get using actor.getUserMatrix().

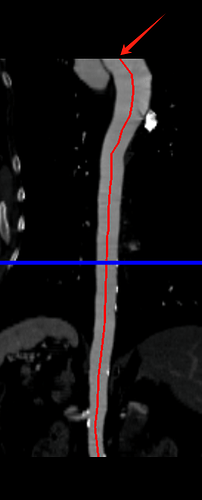

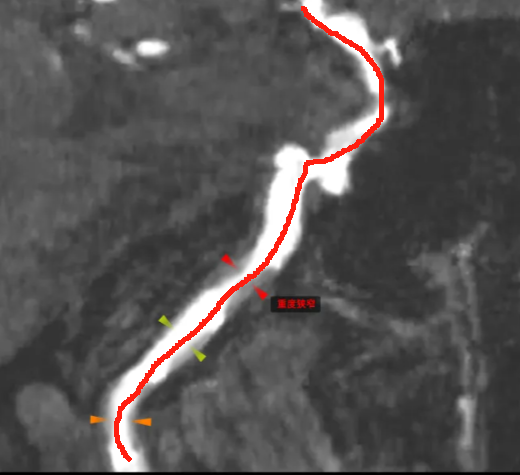

You are very close to something that works!

Your Y axis seems flipped, and you have an offset on the X axis, but I don’t recommend changing the computations of newX and newY because this is how they are computed internally. If you are using the example for the ImageResliceMapper, the actor has a transform that is set at lines 210 and 310: actor.setUserMatrix(...).

So if you want canvas coordinates, you first have to compute the points in world coordinates.

The coordinates that you computed are in the actor's model coordinates, that is, before its user matrix is applied.

So you will have to apply the user matrix.

I advise using the existing `vec3.transformMat4()`. If it doesn't give you the right points, you may have to transpose the matrix first using the `mat4.transpose()` function.
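To see why the storage convention matters, here is a small sketch (the `transformPoint` helper is hypothetical) that applies a 4x4 matrix stored as a flat array of 16 numbers. With `columnMajor = true` it computes the same thing as `vec3.transformMat4()` (gl-matrix stores the translation in elements 12 to 14); with `columnMajor = false` it reads the array as row-major, which is equivalent to calling `mat4.transpose()` first.

```javascript
// Hypothetical helper: apply a 4x4 matrix (flat array of 16 numbers) to a 3D point.
// columnMajor = true  -> gl-matrix layout, translation in m[12], m[13], m[14]
// columnMajor = false -> row-major layout, translation in m[3], m[7], m[11]
function transformPoint(m, p, columnMajor = true) {
  const at = (row, col) => (columnMajor ? m[col * 4 + row] : m[row * 4 + col]);
  const [x, y, z] = p;
  const out = [0, 0, 0];
  for (let r = 0; r < 3; r++) {
    out[r] = at(r, 0) * x + at(r, 1) * y + at(r, 2) * z + at(r, 3);
  }
  // Homogeneous divide (1 for affine matrices); fall back to 1 like gl-matrix does
  const w = at(3, 0) * x + at(3, 1) * y + at(3, 2) * z + at(3, 3) || 1;
  return out.map((c) => c / w);
}
```

Reading a translation matrix with the wrong convention moves the translation into the bottom row, which produces a shifted or distorted result rather than an error, so an overlay that is off in that way is a hint to try the other convention.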

Then you can get the canvas coordinates from the world coordinates using the `computeWorldToDisplay()` function.
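Two details can make display coordinates look wrong when they are drawn on an SVG: the display origin is at the bottom-left of the render window while the SVG origin is at the top-left, and the render window may be sized in device pixels (some vtk.js setups multiply the CSS size by `window.devicePixelRatio`), which can produce x values larger than the canvas width in CSS pixels. Here is a minimal sketch of the conversion under those assumptions (`displayToSvg` is a hypothetical helper, and the SVG is assumed to overlay the canvas exactly).

```javascript
// Hypothetical helper: convert a vtk.js display coordinate (origin at the bottom-left,
// in render-window pixels) to SVG/CSS coordinates (origin at the top-left, in CSS pixels).
//   renderWindowHeight  height of the render window in its own pixels
//   pixelRatio          render-window pixels per CSS pixel (assumed, e.g. window.devicePixelRatio)
function displayToSvg(displayX, displayY, renderWindowHeight, pixelRatio = 1) {
  const x = displayX / pixelRatio;
  // Flip Y so that 0 is at the top, as in SVG
  const y = (renderWindowHeight - displayY) / pixelRatio;
  return [x, y];
}
```

For example, `displayToSvg(200, 100, 600, 2)` gives `[100, 250]`.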

Thank you very much for your careful answer. By the way, while debugging I found that when the centerline is rotated, the x coordinate of the first point keeps changing instead of the line rotating around that point, whereas the x value of the first point of the reference centerline stays the same no matter how it is rotated.

Yes, this is the expected behavior: if the center point has not been set manually, `useStretchedMode()` sets `centerPoint` to the first point of the centerline.

You can set a different center point, for example the middle or the last point of the centerline, by looking at how it is done in `useStretchedMode()`.
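For example, picking the middle or last point only needs the centerline's flat point array. Here is a small sketch (the helpers `pointAt`, `middlePoint` and `lastPoint` are hypothetical). How the chosen point is then passed to the mapper is an assumption to check in `useStretchedMode()`, for instance through a `setCenterPoint()` setter or a center point argument.

```javascript
// Hypothetical helpers: candidate center points taken from a flat point array
// [x0, y0, z0, x1, y1, z1, ...], e.g. centerline.getPoints().getData()
function pointAt(pointsFlat, index) {
  return [pointsFlat[3 * index], pointsFlat[3 * index + 1], pointsFlat[3 * index + 2]];
}

// Point with the middle index (for an even count, the lower of the two middle points)
function middlePoint(pointsFlat) {
  const count = pointsFlat.length / 3;
  return pointAt(pointsFlat, Math.floor((count - 1) / 2));
}

function lastPoint(pointsFlat) {
  return pointAt(pointsFlat, pointsFlat.length / 3 - 1);
}
```

The middle index is only the middle of the centerline if the points are evenly spaced; otherwise, the point at half of the last value of `getDistancesToFirstPoint()` is a better choice.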

Yes, but when I compute the points and connect them into a line, the rotation angle changes: the line does not rotate around the center point that I set as the fixed point of the centerline. Are my coordinates converted incorrectly? I have converted the coordinates to display coordinates, but the x coordinates I get still exceed the width of my canvas. How can I correctly convert the coordinates to my custom canvas coordinates? I have tried many times and it is still wrong, which is very frustrating. You've been very helpful, thanks!

In which coordinate system do you want to get the centerline positions?

Can you share the code that you use for that?

I draw an SVG of the same size on top of the CPR canvas. I need to extract the centerline coordinates in the CPR plane of this image and draw a triangle marker on the SVG centerline. When the mouse drags and rotates the view, the CPR canvas and the SVG centerline need to update together, and the two must stay aligned.

```javascript
import { quat, vec3 } from 'gl-matrix';
import vtkCoordinate from '@kitware/vtk.js/Rendering/Core/Coordinate';

// mapper, actor and stretchRenderer come from the surrounding application;
// width and height are assumed to be the mapper's dimensions
const width = mapper.getWidth();
const height = mapper.getHeight();

const getOrientationAtPoint = (index) => {
  const orientations = mapper.getOrientedCenterline().getOrientations();
  if (orientations && orientations[index]) {
    return orientations[index];
  }
  // No orientation for this point: use the identity quaternion
  return quat.create();
};

const computeLocalXDirection = (index) => {
  // Named q to avoid shadowing the gl-matrix quat module
  const q = getOrientationAtPoint(index);
  const localXDirection = vec3.create();
  const tangentDirection = mapper.getTangentDirection();
  return vec3.transformQuat(localXDirection, tangentDirection, q);
};

const _centerline = mapper.getOrientedCenterline();
const points = [];
const centerPoint = mapper.getCenterPoint();
const distancesToFirstPoint = _centerline.getDistancesToFirstPoint();
const pointsArr = _centerline.getPoints().getData();

for (let pointIndex = 0; pointIndex < pointsArr.length / 3; pointIndex++) {
  const point = pointsArr.slice(pointIndex * 3, pointIndex * 3 + 3);
  const newY = height - distancesToFirstPoint[pointIndex];
  let newX = 0;
  if (centerPoint) {
    const localXDirection = computeLocalXDirection(pointIndex);
    newX = vec3.dot(vec3.subtract([], centerPoint, point), localXDirection);
  } else {
    newX = width;
  }
  const newZ = 0;
  points.push([newX, newY, newZ]);
}

const matrix = actor.getUserMatrix();
const _linepoint = [];

points.forEach((point) => {
  // Allocate a new vector per point: reusing a single one would make every stored entry the same array
  const worldCoord = vec3.transformMat4(vec3.create(), point, matrix);
  this.params.worldCoords.push(worldCoord);
  const coordinate = vtkCoordinate.newInstance();
  coordinate.setCoordinateSystemToWorld();
  coordinate.setValue(worldCoord[0], worldCoord[1], worldCoord[2]);
  const displayCoord = coordinate.getComputedDisplayValue(stretchRenderer);
  _linepoint.push([displayCoord[0], displayCoord[1]]);
});

console.log(_linepoint);
```

For the computation of X, I think that I made a mistake: there is a constant offset of `width / 2` (I assume that `width = mapper.getWidth()`). When there is a center point, it just adds another offset. So you can try replacing the `newX` computation (from `let newX = 0` to the end of the `else` branch) with this:

```javascript
let newX = width / 2;
if (centerPoint) {
  const localXDirection = computeLocalXDirection(pointIndex);
  newX += vec3.dot(vec3.subtract([], centerPoint, point), localXDirection);
}
```

If you still have issues, you can try transposing `actor.getUserMatrix()`, as I don't remember which convention (column-major or row-major) this matrix uses.

Now it's drawn correctly, thanks again. You're too kind!

Hi Thibault Bruyere,

Can I ask you one more question? How do I get the CPR image data after straightening, and then get a cross-section of the vessel perpendicular to the centerline every 2.5 mm? Thanks a lot!

Hello,

The ImageCPRMapper is just a mapper. It means that it takes some data as input (in this case an imageData) and displays what it is asked to display (in this case using an oriented polyline and some rendering settings).

So there is no intermediate straightened image data. 3D Slicer may have what you want, or you could create your own filter that deforms image data.

I see, thank you. What I am doing now is computing, from a centerline point, the vessel centerline points located 2.5 mm upstream or downstream, together with the normal vector of the plane perpendicular to the tangent. But deriving the normal vector from the tangent vector given by `mapper.getCenterlineTangentDirections()` doesn't seem very accurate.
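One way to get both the sample positions every 2.5 mm and a smoother cross-section plane is to compute them yourself from the centerline points. The sketch below is a swapped-in technique, not what the mapper does: it resamples the polyline by arc length, estimates the tangent with a centered difference over one step, and builds the in-plane normal by projecting the previous normal onto the new plane to limit twisting between slices. It assumes the points are in millimeters and that the centerline has at least two distinct points; `crossSectionFrames` is a hypothetical name.

```javascript
// Small vector helpers on [x, y, z] arrays
const vSub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const vAddScaled = (a, b, s) => [a[0] + s * b[0], a[1] + s * b[1], a[2] + s * b[2]];
const vDot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const vCross = (a, b) => [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]];
const vNormalize = (a) => {
  const len = Math.hypot(a[0], a[1], a[2]);
  return [a[0] / len, a[1] / len, a[2] / len];
};

// Hypothetical helper: one frame (position, tangent, normal, binormal) every `step` mm.
// points: array of [x, y, z], at least two distinct points
function crossSectionFrames(points, step) {
  // Cumulative arc length at each input point
  const dist = [0];
  for (let i = 1; i < points.length; i++) {
    dist.push(dist[i - 1] + Math.hypot(...vSub(points[i], points[i - 1])));
  }
  const total = dist[dist.length - 1];

  // Linearly interpolated position at arc length s, clamped to the centerline
  const positionAt = (s) => {
    const d = Math.min(Math.max(s, 0), total);
    let i = 0;
    while (i < points.length - 2 && dist[i + 1] < d) i++;
    const len = dist[i + 1] - dist[i];
    const t = len > 0 ? (d - dist[i]) / len : 0;
    return vAddScaled(points[i], vSub(points[i + 1], points[i]), t);
  };

  const frames = [];
  let prevNormal = null;
  for (let k = 0; k * step <= total + 1e-9; k++) {
    const s = k * step;
    const position = positionAt(s);
    // Centered difference over one step (one-sided at the ends, because of the clamping)
    const tangent = vNormalize(vSub(positionAt(s + step / 2), positionAt(s - step / 2)));
    let normal = null;
    if (prevNormal) {
      // Project the previous normal onto the new cross-section plane
      normal = vAddScaled(prevNormal, tangent, -vDot(prevNormal, tangent));
    }
    if (!normal || Math.hypot(...normal) < 1e-6) {
      // First frame (or degenerate projection): use the world axis least aligned with the tangent
      const abs = tangent.map(Math.abs);
      const axis = abs[0] <= abs[1] && abs[0] <= abs[2] ? [1, 0, 0] : abs[1] <= abs[2] ? [0, 1, 0] : [0, 0, 1];
      normal = vCross(tangent, axis);
    }
    normal = vNormalize(normal);
    const binormal = vCross(tangent, normal);
    frames.push({ distance: s, position, tangent, normal, binormal });
    prevNormal = normal;
  }
  return frames;
}
```

At each frame, `normal` and `binormal` span the plane perpendicular to the vessel at `position`, so they could be used to orient a reslice plane for the cross-section. The input points could come from the centerline's point array, as in the code earlier in this thread.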