Hello Everyone,

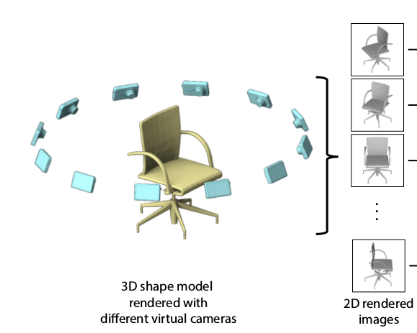

I’m currently generating 2D projection images of a 3D surface mesh from various camera positions. After processing those images, I need to project the 2D information back onto the mesh, for example labeling the correct segmentation region on the 3D surface based on the AI-segmented images. I’ve successfully tackled the first part, but I’m encountering issues with the back-projection step.

During forward projection I saved the camera view transform (GetViewTransformMatrix) for each snapshot, and for back projection I use it to transform the polydata back to the same view. I then use vtkHardwareSelector (cell selection) to identify the visible points for each view and vtkCoordinate to obtain their view coordinates. From these coordinates I look up the corresponding image location and label each point based on the pixel value there.

However, the current method incorrectly labels regions outside the desired (correct) area. I would appreciate any suggestions on how to improve this process.
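
For reference, here is a minimal sketch of the world-to-pixel mapping that the lookup step depends on, written in plain Python so it can be checked independently of the rendering pipeline. It is an illustration under stated assumptions, not the code I am currently running. The function name `world_to_pixel` is hypothetical. The `composite` argument is assumed to be the full 4x4 view-plus-projection matrix as nested lists (in VTK this could come from `vtkCamera.GetCompositeProjectionTransformMatrix(aspect, -1, 1)`), since the view transform on its own only maps world coordinates to camera coordinates. The image is assumed to be stored with its origin at the top-left, whereas VTK display coordinates start at the bottom-left, so the row index is flipped.

```python
def world_to_pixel(point, composite, width, height):
    """Map a world-space point to (row, col) in a top-left-origin image.

    composite: assumed 4x4 view+projection matrix (nested lists, row-major).
    Returns None when the point falls outside the view frustum in x/y.
    """
    x, y, z = point
    hom = (x, y, z, 1.0)

    # Homogeneous multiply: clip = composite * [x, y, z, 1]^T
    clip = [sum(composite[r][c] * hom[c] for c in range(4)) for r in range(4)]

    w = clip[3]
    if w == 0.0:
        return None

    # Perspective divide gives normalized coordinates in [-1, 1].
    ndc_x, ndc_y = clip[0] / w, clip[1] / w
    if not (-1.0 <= ndc_x <= 1.0 and -1.0 <= ndc_y <= 1.0):
        return None

    # Scale to pixel indices, clamping the upper edge into the last pixel.
    col = min(int((ndc_x + 1.0) * 0.5 * width), width - 1)
    row_from_bottom = min(int((ndc_y + 1.0) * 0.5 * height), height - 1)

    # Flip vertically: display origin is bottom-left, image origin top-left
    # (an assumption about how the snapshot array is stored).
    row = height - 1 - row_from_bottom
    return row, col
```

If the labels land in the wrong place, the steps I would compare against this sketch are whether the projection matrix is included, whether the aspect ratio matches the render window used for the snapshot, and whether the vertical flip is applied exactly once.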