This relates to #5057 and #12000. Other recent needs had me spelunking around inside VTK’s implementation of shadows, so I thought I’d tackle this topic. However, I’ve hit a wall.

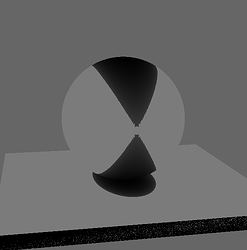

Starting with the Shadows example, I made the following simplifications:

- Simply use the sphere

- A single light: a directional light pointing straight down (with its intensity increased)

- Back the camera out so we can see things a bit better (more visual context).

So, we’ll render the image twice: once with a square render window and once with a window twice as wide as it is high.

When the render window gets wider, the shadow gets narrower. Although the directional light’s view frustum is defined to enclose the bounding box, the region it actually covers appears to become narrower. So, I explored a number of hypotheses:

The in-light depth is incorrectly computed

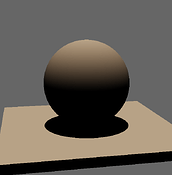

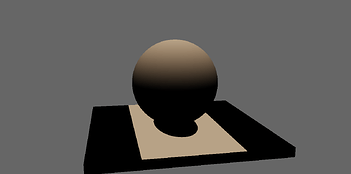

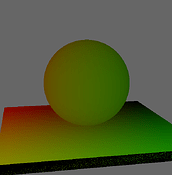

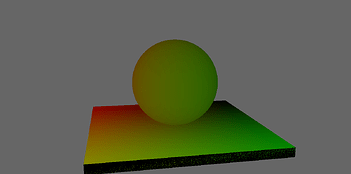

This would arise from the idea that the various transforms from geometry to world to light are somehow corrupted. We can test this by coloring the object with its normalized depth (in the light frame). So, distant fragments will be white, near fragments will be dark. Because the light is a directional light pointing straight down, we expect everything to be colored with a perfectly vertical gradient, light to dark.

It’s apparent that the transform matrices are not in any way corrupted by changing the render window size. They both exhibit the same gradient.
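As a minimal sketch of why a perfectly vertical gradient is the expected result: for a directional light, the light-frame depth is just the projection of the world position onto the light direction, so it cannot depend on the horizontal position. The function name, the scene extents, and the near/far values below are hypothetical and are not taken from VTK.

```python
def normalized_light_depth(point, light_dir, near, far):
    """Depth of `point` along the unit vector `light_dir`, mapped to [0, 1]."""
    # Project the world-space point onto the light direction.
    depth = sum(p * d for p, d in zip(point, light_dir))
    return (depth - near) / (far - near)


# Hypothetical scene: the light points straight down (-y) and the
# geometry spans y in [-1, 1].
light_dir = (0.0, -1.0, 0.0)
near, far = -1.0, 1.0  # depth along light_dir at y = +1 and y = -1

top = normalized_light_depth((0.0, 1.0, 0.0), light_dir, near, far)
bottom = normalized_light_depth((0.3, -1.0, 0.7), light_dir, near, far)
same_height = normalized_light_depth((5.0, 1.0, -2.0), light_dir, near, far)

print(top, bottom, same_height)
```

Points at the same height get the same value regardless of x and z, which is the behavior both renderings show.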

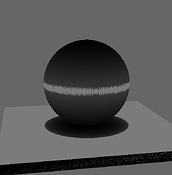

Bad texture coordinates

While the depth being computed is correct, perhaps the texture coordinates we’d use to look up into the shadow map are flawed?

Again, we can assess this by dumping the shadow map coordinates into the radiance red and green channels.

As the images show, the u-direction (the red channel) is mapped from right to left and the v-direction (the green channel) is mapped from back to front. Given the intensities of red, green, yellow, and black, it is clear that the associated texture coordinate for each fragment is spanning the whole shadow map domain. So, we’re sampling at the right places.

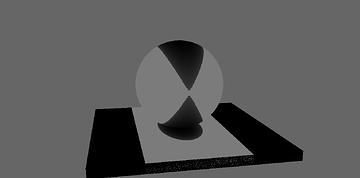

Shadow map has bad values

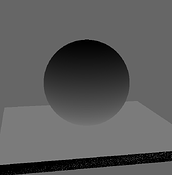

So, we know we’re transforming vertices into the shadow frame correctly (leading to the appropriate distance and texture coordinate values). Is there perhaps a problem with the map’s depth value? We can test this by simply applying the depth values in the shadow map as a texture.

In this case, we use the texture coordinates evaluated in the previous step and find the map’s depth value (by taking the log of the map value and dividing by depthC). This gets applied across all three channels.
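The decoding step can be sketched as follows. It assumes the map stores `exp(depthC * depth)`, which is what taking the log and dividing by depthC implies. The value of `DEPTH_C` here is a hypothetical placeholder, not the constant VTK actually uses.

```python
import math

DEPTH_C = 10.0  # hypothetical value, for illustration only


def encode_depth(depth):
    """Assumed storage form: exponential of the scaled depth."""
    return math.exp(DEPTH_C * depth)


def decode_depth(stored):
    """Recover the depth: log of the map value divided by depthC."""
    return math.log(stored) / DEPTH_C


# Round-trip a few normalized depths through the assumed encoding.
round_trip = [decode_depth(encode_depth(d)) for d in (0.0, 0.25, 0.5, 1.0)]
print(round_trip)
```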

Finally, we arrive at our first discrepancy. The square image is what we would expect:

- The top of the sphere is darkest.

- As we move down the sphere the sphere gets lighter (indicating increased distance to light).

- The equator has an artificially light band due to image sampling – at the very edge of the equator, whether we apply sphere distance or ground distance is purely a function of sampling precision. Where we see bright grey, we’d end up applying shadow to the sphere.

- As we proceed below the equator, all sphere fragments are occluded and bear the color of the point on the sphere reflected over the equator. (Again, directional light pointing straight down).

- Finally, the ground plane shows maximum distance except for the circular projection of the sphere on the ground plane. While it’s not apparent, the black circle on the ground plane is darker nearer the center of the disk.

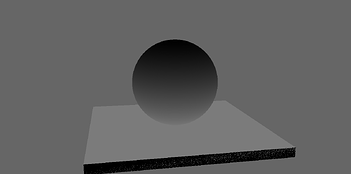

However, the image with a wider render window is clearly wrong. As with the shadow observed in the original image, the sampling is clearly reading from a “squished” texture. The v-direction is consistent, but the u-direction has apparently been scaled.

Furthermore, the region outside of the illuminated rectangular region is colored black here. The shadow map (in `vtkShadowMapBakerPass`) has been configured to clamp to edge in all directions. So, if the texture coordinate is somehow being scaled outside of the domain [0, 1], I would expect the lookup to return values akin to the edge of the ground plane.
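To make that expectation concrete, here is a small sketch of a nearest-neighbour lookup with clamp-to-edge wrapping. The 3x3 depth map and the function name are hypothetical; this is not how VTK or OpenGL implements sampling, only a model of the wrapping rule.

```python
def sample_clamp_to_edge(texture, u, v):
    """Nearest-neighbour lookup on a row-major 2D list, clamping (u, v) to [0, 1]."""
    rows, cols = len(texture), len(texture[0])
    u = min(max(u, 0.0), 1.0)
    v = min(max(v, 0.0), 1.0)
    # u == 1.0 would index one past the end, so clamp the texel index too.
    col = min(int(u * cols), cols - 1)
    row = min(int(v * rows), rows - 1)
    return texture[row][col]


# Hypothetical depth map: maximum depth (1.0) around the border,
# a nearer occluder (0.2) in the centre.
depth_map = [
    [1.0, 1.0, 1.0],
    [1.0, 0.2, 1.0],
    [1.0, 1.0, 1.0],
]

inside = sample_clamp_to_edge(depth_map, 0.5, 0.5)
right_of_domain = sample_clamp_to_edge(depth_map, 1.7, 0.5)
left_of_domain = sample_clamp_to_edge(depth_map, -0.4, 0.5)
print(inside, right_of_domain, left_of_domain)
```

With clamp to edge, any coordinate outside the domain returns the border texel, which in this model holds maximum depth rather than the minimum depth seen in the wide render.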

If I take the texture coordinates validated above and apply the following transform:

$$
\begin{bmatrix} 2 & 0 \\ 0 & 1 \end{bmatrix}
\begin{bmatrix} u \\ v \end{bmatrix}
+
\begin{bmatrix} -0.5 \\ 0 \end{bmatrix}
=
\begin{bmatrix} u' \\ v' \end{bmatrix}
$$

I can create mostly the same effect in a square image. The shadow under the sphere is elliptical. However, the domain outside the single, centered shadow map is clamping to maximum distance (and not minimum distance as shown above).
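The transform above is equivalent to scaling u by 2 about the texture centre, since 2u - 0.5 = 2(u - 0.5) + 0.5. The sketch below shows which input coordinates land outside [0, 1] as a result. The `aspect` parameter is a hypothetical generalization of the factor 2; that it corresponds to the render window’s aspect ratio is only the hypothesis under test, not something confirmed in VTK’s code.

```python
def squish(u, v, aspect=2.0):
    """Scale u about the texture centre (0.5) by `aspect`; leave v unchanged."""
    return aspect * (u - 0.5) + 0.5, v


def in_domain(u):
    """True when u lies inside the texture domain [0, 1]."""
    return 0.0 <= u <= 1.0


samples = (0.0, 0.25, 0.5, 0.75, 1.0)
transformed = [squish(u, 0.5)[0] for u in samples]

for u, u_prime in zip(samples, transformed):
    print(f"u={u:.2f} -> u'={u_prime:.2f} in domain: {in_domain(u_prime)}")
```

Only the middle half of the original u range, [0.25, 0.75], still maps into the texture; everything else falls outside and depends on the wrapping mode.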

This is where I’ve hit a wall. I’ve got two questions:

- Why, when the render window is scaled, does the shadow map lookup seem to get scaled by the same proportion?

- Why, when apparently sampling outside of the texture domain [0, 1]x[0, 1] am I getting minimum depth instead of maximum depth? (Assuming sampling outside of that domain is what is actually happening?)

For all of the poking around I’ve done in VTK’s code, I haven’t been able to see anything that looks like the obvious cause for this behavior.