Hi everyone,

From the Kitware VTK team, we are excited to share some of our initial explorations into integrating Large Language Models (LLMs) with VTK. Our goal is to harness the power of LLMs to enhance the VTK user experience and streamline scientific visualization workflows. Here is a glimpse of what we have been working on:

Current prototypes


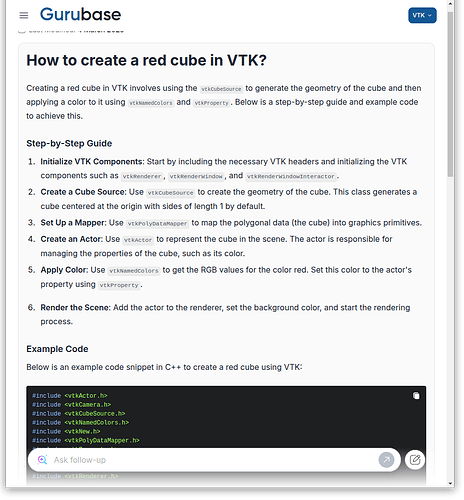

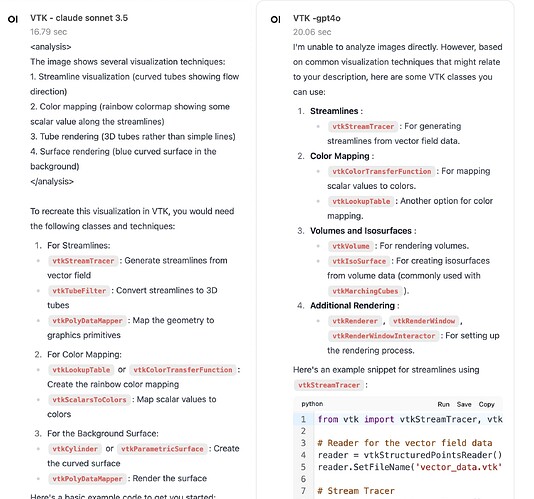

Interactive Q&A: Imagine having an AI assistant that answers your VTK questions in real time. From basic how-tos to detailed explanations, this prototype aims to provide quick and accurate responses to user queries, complementing resources such as this Discourse forum. As a proof of concept, we just launched a VTK assistant that anyone can use! It is built on the open-source Gurubase platform and draws its information from the VTK documentation and examples, so your conversations stay focused on VTK and the answers are more relevant. Give it a try and let us know what works and what doesn't. As VTK developers, we may have biases in how we use it, so your feedback is essential for improving it. Please note that the assistant logs chats and answers to help us improve future versions and to build test cases for evaluating future prototypes.


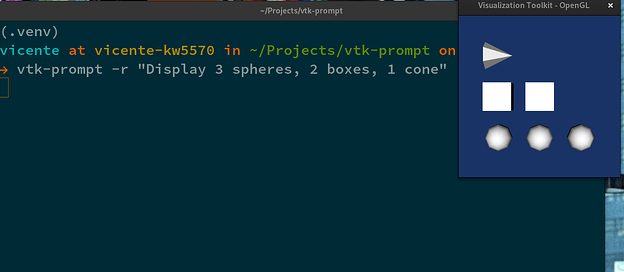

LLM VTK interpreter: Describe what you want to achieve in plain language, and the LLM generates the corresponding VTK code and executes it. This could significantly lower the barrier to entry for newcomers, add an entirely new way to interact with VTK, and boost productivity for experienced users.
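To make the generate-then-execute loop a bit more concrete, here is a minimal Python sketch. It is not the prototype's implementation: `fake_llm`, `check_generated_code`, `is_vtk_module`, and `interpret` are hypothetical names, the model call is stubbed with a canned VTK script, and the import allowlist is only an assumption about one way to vet generated code before running it.

```python
import ast


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call: ignores the prompt and
    returns a canned VTK Python script."""
    return (
        "from vtkmodules.vtkFiltersSources import vtkSphereSource\n"
        "sphere = vtkSphereSource()\n"
        "sphere.SetRadius(2.0)\n"
        "sphere.Update()\n"
    )


def check_generated_code(code: str) -> list:
    """Parse generated code without running it and return the modules it imports.

    ast.parse raises SyntaxError if the model produced malformed Python.
    """
    tree = ast.parse(code)
    modules = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            modules.extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            modules.append(node.module)
    return modules


def is_vtk_module(name: str) -> bool:
    """Allow only the `vtk` package and the `vtkmodules` namespace."""
    return name in ("vtk", "vtkmodules") or name.startswith(("vtk.", "vtkmodules."))


def interpret(prompt: str, llm=fake_llm, execute: bool = False):
    """Generate code from a plain-language prompt, vet it, and optionally run it."""
    code = llm(prompt)
    disallowed = [m for m in check_generated_code(code) if not is_vtk_module(m)]
    if disallowed:
        raise ValueError(f"generated code imports non-VTK modules: {disallowed}")
    namespace = {}
    if execute:
        # Runs the generated script in a fresh namespace (requires VTK installed).
        exec(code, namespace)
    return code, namespace


generated, _ = interpret("Create a sphere of radius 2")
print(generated)
```

Passing `execute=True` would actually run the canned script, which requires VTK's Python bindings (for example via `pip install vtk`). An import allowlist like this is only a cheap first filter, not a sandbox: untrusted generated code should still run in an isolated process or container.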


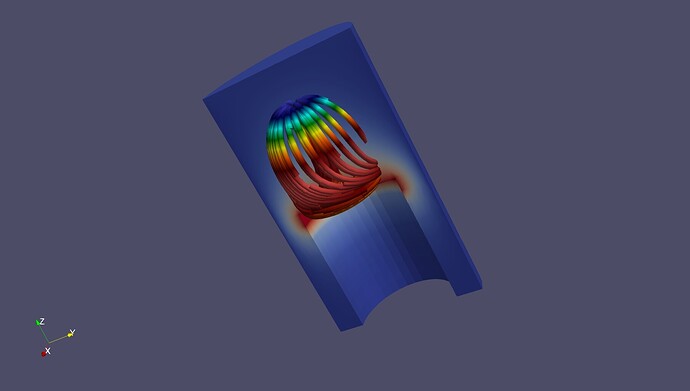

Sample File Generation: Quickly generate VTK sample files based on textual descriptions. This could be a valuable tool for testing, experimentation, and education.
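For a sense of what such a generated file can look like, here is a minimal Python sketch that writes a small triangle mesh in VTK's legacy ASCII format. It assumes the target is the legacy `.vtk` format (version 3.0 layout) rather than the XML formats, and `write_legacy_polydata` is a hypothetical helper, not part of the prototype; the hard-coded points and triangles stand in for what a model might derive from a textual description.

```python
import os
import tempfile


def write_legacy_polydata(path, title, points, triangles):
    """Write a triangle mesh to a legacy ASCII VTK file (DATASET POLYDATA)."""
    if "\n" in title:
        raise ValueError("the header title must fit on a single line")
    lines = [
        "# vtk DataFile Version 3.0",  # version identifier
        title,                         # free-form header line
        "ASCII",                       # file type: ASCII or BINARY
        "DATASET POLYDATA",
        f"POINTS {len(points)} float",
    ]
    lines += [f"{x} {y} {z}" for x, y, z in points]
    # POLYGONS <n cells> <size>, where size counts every integer in the cell
    # list: each triangle contributes its vertex count (3) plus 3 indices.
    lines.append(f"POLYGONS {len(triangles)} {4 * len(triangles)}")
    lines += [f"3 {a} {b} {c}" for a, b, c in triangles]
    with open(path, "w") as f:
        f.write("\n".join(lines) + "\n")


# Example description: "a unit square in the XY plane made of two triangles".
square_points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
square_triangles = [(0, 1, 2), (0, 2, 3)]
out_path = os.path.join(tempfile.gettempdir(), "square.vtk")
write_legacy_polydata(out_path, "unit square", square_points, square_triangles)
```

The resulting `square.vtk` should open in ParaView or be readable with VTK's `vtkPolyDataReader`.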

We need your feedback

We believe these prototypes have the potential to transform the way we interact with VTK; however, they are only a few of the ideas we came up with. We invite you to join us by suggesting new use cases and ideas for how LLMs could be leveraged within the VTK ecosystem.

Your feedback will play a crucial role in shaping the future of LLM integration in VTK. Let’s work together to push the boundaries of scientific visualization!

Please share your thoughts and ideas in the comments below.

We are looking forward to hearing from you!

Join us in the conversation

@will.schroeder @olearypatrick @Christos_Tsolakis @vbolea