Hi everyone,

I would like to propose some improvements to VTK, at both the C++ and Python levels, with the following goals:

1. A convenient interface between the VTK data model and numpy, pandas, etc. (wrapper changes: done; Python modules)

2. More Pythonic access to VTK objects through things like properties. (wrapper changes)

3. A few convenience methods that make it easier to use VTK filters without hooking up a pipeline (what I call the imperative mode). (mostly C++ changes)

4. A high-level interface to rendering based on representations and views, similar to ParaView's. (C++ changes)

I have a basic prototype that covers parts of items 1-3 here. Note that I prototyped 2 and 3 in Python, even though much of this would be done at the C++/wrapper layer in the final implementation. I would love to hear feedback and ideas about all of these items, and volunteers for the work would be great too :-). In this proposal, I would like to concentrate on item 1. I will create separate proposals for items 2-4 in the future.

There is already a module that provides numpy-friendly dataset classes:

    from vtkmodules.numpy_interface import dataset_adapter as dsa
    import vtkmodules.numpy_interface.algorithms as algs
    from vtkmodules.vtkImagingCore import vtkRTAnalyticSource

    rt = vtkRTAnalyticSource()
    rt.Update()

    image = dsa.WrapDataObject(rt.GetOutput())
    rtdata = image.PointData['RTData']  # rtdata is a numpy array compatible class

    rtmin = algs.min(rtdata)  # wrappers around numpy algorithms
    rtmax = algs.max(rtdata)
    rtnorm = (rtdata - rtmin) / (rtmax - rtmin)

    image.PointData.append(rtnorm, 'RTData - normalized')
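
A caveat on the min-max normalization above: if the array is constant, `rtmax - rtmin` is zero, and numpy will by default produce NaN values (with a RuntimeWarning) instead of raising an error. The sketch below shows the same formula with an explicit guard. It uses a hypothetical `normalize` helper on a plain Python list rather than a VTK array, so it runs without VTK or numpy.

```python
def normalize(values, default=0.0):
    """Min-max normalize a sequence of numbers into [0, 1].

    Hypothetical helper for illustration: a constant input (max == min)
    maps every value to `default` instead of dividing by zero.
    """
    lo = min(values)
    hi = max(values)
    span = hi - lo
    if span == 0:
        return [default for _ in values]
    return [(v - lo) / span for v in values]


print(normalize([2.0, 4.0, 6.0]))  # [0.0, 0.5, 1.0]
print(normalize([3.0, 3.0]))       # [0.0, 0.0]
```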

This module also handles composite data and provides the same interface:

    from vtkmodules.vtkCommonDataModel import vtkMultiBlockDataSet

    mb = vtkMultiBlockDataSet()
    mb.SetBlock(0, image.VTKObject)
    mb.SetBlock(1, image.VTKObject)

    cds = dsa.WrapDataObject(mb)
    # VTKCompositeDataArray, which manages multiple arrays under the covers
    rtdatac = cds.PointData['RTData']
    rtmin = algs.min(rtdatac)
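
Conceptually, a reduction such as `algs.min` over a composite array can be computed in stages: reduce each block's array, then reduce the per-block results, and in a distributed run, combine the local result with those of the other processes. The simplified sketch below covers only the first two stages, with plain lists standing in for block arrays and `None` standing in for a block that lacks the array. It illustrates the idea and is not the dataset_adapter implementation. The `composite_min` name is hypothetical.

```python
from typing import Optional, Sequence


def composite_min(blocks: Sequence[Optional[Sequence[float]]]) -> Optional[float]:
    """Minimum over several per-block arrays.

    Blocks that are None or empty (e.g. a block without the requested
    array) are skipped; returns None if no block has any values.
    """
    # Stage 1: reduce each block independently.
    partials = [min(block) for block in blocks if block]
    # Stage 2: reduce the per-block results. In a distributed run, this local
    # result would then be combined across processes (e.g. via an MPI
    # allreduce); that stage is omitted here.
    return min(partials) if partials else None


blocks = [[4.0, 2.5, 9.0], None, [3.0, 1.5]]
print(composite_min(blocks))  # 1.5
```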

This interface goes beyond simply providing numpy access. It supports:

- Single datasets

- Composite datasets and arrays

- Algorithms that work over the entire data model, including in distributed parallel runs

These are used by ParaView’s Python algorithms and filters.

IMO, this is a fairly good interface and is rich in functionality. However, it is not easy to discover, is not well integrated, and its API could be more Pythonic.

In order to achieve these goals, David Gobbi and I made some changes to the VTK wrappers. These make it possible to override VTK wrapped classes with Python subclasses, like this:

    from vtkmodules.vtkCommonCore import vtkPoints

    @vtkPoints.override
    class vtkPointsCustom(vtkPoints):
        pass

    p = vtkPoints()  # p is actually a vtkPointsCustom instance
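
For readers unfamiliar with the idea, here is a pure-Python analogy of the behavior described above: the decorator registers a subclass, and constructing the base class afterwards returns an instance of that subclass. The names `Base`, `BaseCustom`, and `_override` are hypothetical, and the sketch relies on `__new__`. It makes no claim about how VTK's wrapper layer actually implements `override`.

```python
class Base:
    """Stand-in for a wrapped class such as vtkPoints (hypothetical)."""

    _override = None  # subclass registered via Base.override, if any

    def __new__(cls, *args, **kwargs):
        # Look only in this class's own __dict__, so that constructing the
        # registered subclass directly is not redirected again.
        target = cls.__dict__.get("_override") or cls
        return super().__new__(target)

    @classmethod
    def override(cls, subclass):
        """Class decorator: make `cls()` build `subclass` instead."""
        if not issubclass(subclass, cls):
            raise TypeError(f"{subclass.__name__} must derive from {cls.__name__}")
        cls._override = subclass
        return subclass


@Base.override
class BaseCustom(Base):
    def describe(self):
        return "custom"


p = Base()
print(type(p).__name__)  # BaseCustom
```

Keying the lookup on `cls.__dict__` means only the class that was overridden is redirected. Constructing `BaseCustom` directly still works as usual.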

With this functionality in place (already merged to master, see this MR), we can take the existing interface further:

    from vtkmodules.vtkImagingCore import vtkRTAnalyticSource
    import datamodel  # prototype module

    rt = vtkRTAnalyticSource()
    rt.Update()

    image = rt.GetOutput()  # image is actually datamodel.ImageData, which has numpy accessors
    rtdata2 = image.point_data["RTData"] * 2
    image.point_data["rtdata2"] = rtdata2
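
The `point_data[...]` syntax above behaves like a mapping from array names to arrays. A minimal sketch of such an accessor is shown below, built on `collections.abc.MutableMapping` over a plain dict that stands in for a vtkPointData object. The class name `ArrayAccessor` and its storage are hypothetical. A real implementation would instead forward to calls such as `GetArray` and `AddArray` and convert between VTK and numpy arrays.

```python
from collections.abc import MutableMapping


class ArrayAccessor(MutableMapping):
    """Dict-like view of named arrays (hypothetical sketch).

    `_arrays` is a plain dict standing in for a vtkPointData object.
    """

    def __init__(self):
        self._arrays = {}

    def __getitem__(self, name):
        return self._arrays[name]  # KeyError if the array does not exist

    def __setitem__(self, name, values):
        # In this sketch, assigning to an existing name replaces that array.
        self._arrays[name] = list(values)

    def __delitem__(self, name):
        del self._arrays[name]

    def __iter__(self):
        return iter(self._arrays)

    def __len__(self):
        return len(self._arrays)


point_data = ArrayAccessor()
point_data["RTData"] = [1.0, 2.0, 3.0]
point_data["rtdata2"] = [v * 2 for v in point_data["RTData"]]
print(sorted(point_data))  # ['RTData', 'rtdata2']
```

Once the five abstract methods are defined, deriving from `MutableMapping` provides `keys()`, `items()`, `get()`, `in`, `update()`, and so on for free.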

We can also do things like this:

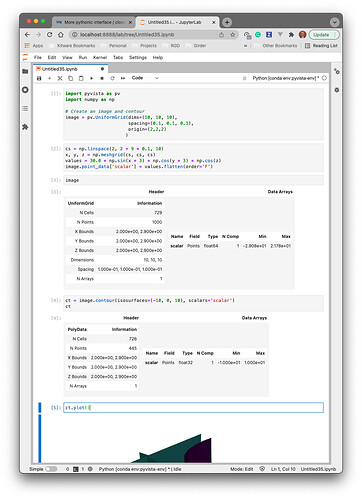

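    import numpy
    import datamodel  # prototype module

    image = datamodel.ImageData(dimensions=(10, 10, 10), spacing=(0.1, 0.1, 0.1), origin=(2, 2, 2))

    cs = numpy.linspace(2, 2 + 9 * 0.1, 10)
    # indexing='ij' gives arrays of shape (nx, ny, nz), so flatten(order='F')
    # makes x vary fastest, matching VTK's point ordering
    x, y, z = numpy.meshgrid(cs, cs, cs, indexing='ij')
    values = 30.0 * numpy.sin(x * 3) * numpy.cos(y * 3) * numpy.cos(z)

    image.point_data['scalar'] = values.flatten(order='F')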

Ideally, the constructor would be vtkImageData rather than ImageData. This needs changes to the wrappers, which we could make in the future.
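
The `flatten(order='F')` call in the image example matters because VTK stores image point data with x varying fastest, then y, then z. Point (i, j, k) of an image with dimensions (nx, ny, nz) therefore sits at flat index i + nx*(j + ny*k). The stdlib-only check below confirms that formula and its inverse. The helper names `flat_index` and `ijk_from_flat` are made up for illustration.

```python
def flat_index(i, j, k, dims):
    """Flat point index for (i, j, k) with x fastest, then y, then z."""
    nx, ny, _ = dims
    return i + nx * (j + ny * k)


def ijk_from_flat(index, dims):
    """Inverse of flat_index."""
    nx, ny, _ = dims
    i = index % nx
    j = (index // nx) % ny
    k = index // (nx * ny)
    return i, j, k


dims = (4, 3, 2)
# Visiting points with k outermost and i innermost yields 0, 1, 2, ... in order.
order = [flat_index(i, j, k, dims)
         for k in range(dims[2])
         for j in range(dims[1])
         for i in range(dims[0])]
assert order == list(range(dims[0] * dims[1] * dims[2]))
print(ijk_from_flat(flat_index(3, 2, 1, dims), dims))  # (3, 2, 1)
```

With numpy, an array of shape (nx, ny, nz) flattened with `order='F'` follows this same order, because its first index varies fastest.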

The prototype I provided supports vtkImageData, vtkPartitionedDataSet (including iterating over partitions), and arrays/attributes. Extending it to the rest of VTK’s data model is fairly easy, since the dataset_adapter module handles most of the complications.

So what do you think?
