@jaswantp We have a fix, written by Daniel, who works at our company.

We strongly suggest adding this fix to VTK, because the issue is a show-stopper for anyone who uses parallel projection on large test cases (e.g., everyone in aerospace).

We would appreciate any feedback you have on Daniel’s code.

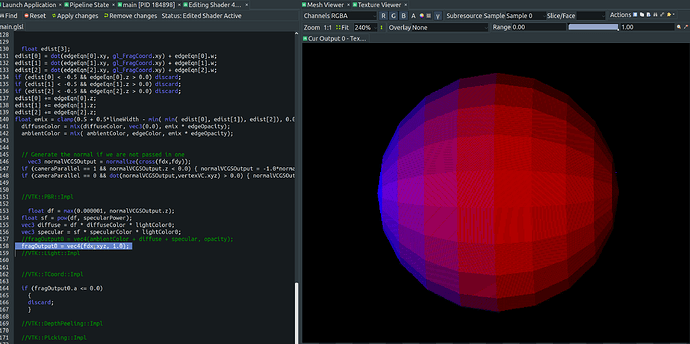

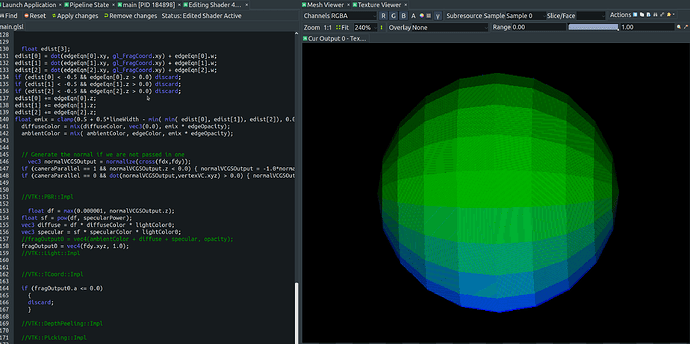

By default, one line in the code (the `toggleMoireStripFix(true, cone2Actor);` call near the end of `main`) is commented out. When you run the demo, one cone therefore shows the moiré patterns. If you uncomment that line, none of the three actors show them.

```cpp
// This is a demonstration of Daniel's fix for the Moire problem.
// There are two cones with their tips close together. Zoom in on the gap to see the small sphere.

#include "vtkVersion.h"

#if VTK_MAJOR_VERSION > 6
#include "vtkAutoInit.h"
VTK_MODULE_INIT(vtkRenderingOpenGL2);
VTK_MODULE_INIT(vtkRenderingFreeType);
VTK_MODULE_INIT(vtkInteractionStyle);
#endif

#include <vtkActor.h>
#include <vtkCamera.h>
#include <vtkDataSetMapper.h>
#include <vtkNew.h>
#include <vtkPolyData.h>
#include <vtkPolyDataMapper.h>
#include <vtkProperty.h>
#include <vtkRenderWindow.h>
#include <vtkRenderWindowInteractor.h>
#include <vtkRenderer.h>
#include <vtkSmartPointer.h>
#include <vtkSphereSource.h>
#include <vtkConeSource.h>
#include <vtkOpenGLActor.h>
#include <vtkShaderProperty.h>
#include <vtkOpenGLProperty.h>
#include <vtkOpenGLShaderProperty.h>

#include <cstdlib> // EXIT_SUCCESS
```

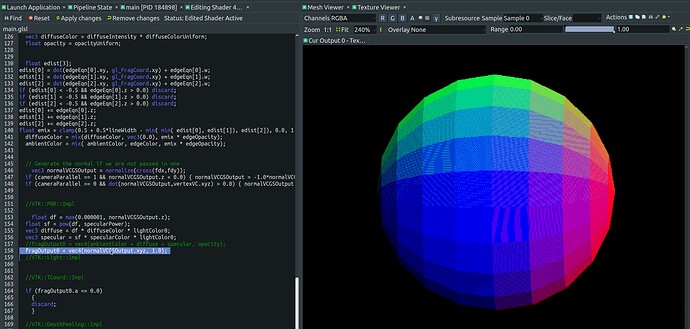

```cpp
// Surface normals, thanks to Daniel!
// Replaces VTK's flat-shading normal with a per-triangle normal computed in a
// geometry shader. Pass active = false to remove the overrides again.
// On VTK versions older than 9 this function does nothing.
void toggleMoireStripFix(bool active, vtkActor* actor)
{
  if (actor == nullptr) { return; }

#if VTK_MAJOR_VERSION >= 9
  // Do not apply these shader replacements to edge or vertex data. This destroys
  // their ability to be seen by the user. These shader overrides are strictly
  // for polygonal surface data! -dc 08/08/24
  vtkOpenGLProperty* gl_prop = vtkOpenGLProperty::SafeDownCast(actor->GetProperty());
  if (gl_prop == nullptr) { return; }

  vtkShaderProperty* shader_prop = actor->GetShaderProperty();
  if (shader_prop == nullptr) { return; }

  // We want to disable our overrides. Note that this clears ALL shader
  // replacements on the actor, not only the ones added below.
  if (!active)
  {
    shader_prop->ClearAllShaderReplacements();
    return;
  }

  // We need the geometry shader to be primed. This line is REQUIRED in order
  // to get VTK to pass vertex data into the geometry shader. THIS COMES WITH
  // OVERHEAD, BUT WE WERE ALREADY SUFFERING FROM IT 90% OF THE TIME (mesh
  // geometry with edges drawn). -dc @ 1:23AM -08/08/24 i have consumed too much coffee
  gl_prop->SetEdgeVisibility(true);

  // Arguments of each replacement: tag to find, apply before VTK's own
  // replacements, replacement text, replace all occurrences.

  // Declare a per-triangle normal output in the default poly geometry shader:
  shader_prop->AddGeometryShaderReplacement(
    "//VTK::Normal::Dec", true,
    "//VTK::Normal::Dec\n"
    "out vec3 normalVCGSOutput;\n",
    false);

  // We no longer need to pass any model-space vertices into the geometry shader,
  // nor do we need to multiply normals by the inverse transpose of the model-view
  // matrix. This saves a lot of muls, stores, and adds. :)
  // The vertices are already in view space, so the face normal is the
  // normalized cross product of two triangle edges:
  shader_prop->AddGeometryShaderReplacement(
    "//VTK::Normal::Start", true,
    "//VTK::Normal::Start\n"
    "vec3 p0 = vertexVCVSOutput[0].xyz - vertexVCVSOutput[1].xyz;\n"
    "vec3 p1 = vertexVCVSOutput[2].xyz - vertexVCVSOutput[0].xyz;\n"
    "normalVCGSOutput = normalize(cross(p1, p0));\n",
    false);

  // Forward our normal to the fragment stage of the pipeline:
  shader_prop->AddFragmentShaderReplacement(
    "//VTK::PositionVC::Dec", true,
    "in vec4 vertexVCGSOutput;\n"
    "in vec3 normalVCGSOutput;\n",
    false);

  // Replace VTK's normal computation with the normal from the geometry shader,
  // flipped if necessary so that it faces the camera.
  // DO NOT. I REPEAT. DO NOT RENAME normalVCVSOutput. IT IS USED BY VTK
  // DURING ITS VARIOUS PIPELINE STAGES. -dc @ 08/08/24
  shader_prop->AddFragmentShaderReplacement(
    "//VTK::Normal::Impl", true,
    "vec3 normalVCVSOutput = normalVCGSOutput;\n"
    "if (cameraParallel == 1 && normalVCVSOutput.z < 0.0) { normalVCVSOutput = -1.0*normalVCVSOutput; }\n"
    "if (cameraParallel == 0 && dot(normalVCVSOutput, vertexVC.xyz) > 0.0) { normalVCVSOutput = -1.0*normalVCVSOutput; }\n",
    false);

  shader_prop->Modified();
  gl_prop->Modified();
#endif
}
```
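To check the shader math away from the GPU, here is a minimal CPU-side sketch in plain C++ that mirrors what the two `//VTK::Normal` replacements in `toggleMoireStripFix` compute. First, it takes a face normal from the cross product of two triangle edges in view coordinates. Then it flips that normal so it faces the camera, which looks down -z in view coordinates. `Vec3`, `triangleNormalVC`, and `faceCamera` are hypothetical names used only for this illustration. They are not VTK API and not part of Daniel's patch.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical minimal 3-vector type for this sketch (not a VTK class).
struct Vec3 {
  double x, y, z;
};

Vec3 sub(const Vec3& a, const Vec3& b) { return Vec3{a.x - b.x, a.y - b.y, a.z - b.z}; }

Vec3 cross(const Vec3& a, const Vec3& b) {
  return Vec3{a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Vec3 normalize(const Vec3& v) {
  const double len = std::sqrt(dot(v, v));
  assert(len > 0.0 && "degenerate triangle");
  return Vec3{v.x / len, v.y / len, v.z / len};
}

// Mirrors the //VTK::Normal::Start geometry-shader replacement:
// p0 = v0 - v1, p1 = v2 - v0, normal = normalize(cross(p1, p0)).
Vec3 triangleNormalVC(const Vec3& v0, const Vec3& v1, const Vec3& v2) {
  const Vec3 p0 = sub(v0, v1);
  const Vec3 p1 = sub(v2, v0);
  return normalize(cross(p1, p0));
}

// Mirrors the //VTK::Normal::Impl fragment-shader replacement:
// parallel projection flips normals with z < 0; perspective flips normals
// that point away from the camera (positive dot with the view-space position).
Vec3 faceCamera(Vec3 n, const Vec3& vertexVC, bool cameraParallel) {
  const bool flip = cameraParallel ? (n.z < 0.0) : (dot(n, vertexVC) > 0.0);
  if (flip) { n = Vec3{-n.x, -n.y, -n.z}; }
  return n;
}
```

For a counter-clockwise triangle in a constant-z plane, such as (0, 0, -5), (1, 0, -5), (0, 1, -5), `triangleNormalVC` returns (0, 0, 1). Swapping the last two vertices gives (0, 0, -1). `faceCamera` flips that back to (0, 0, 1) with either projection.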

```cpp
int main(int, char*[])
{
  // Create a sphere
  vtkNew<vtkSphereSource> sphereSource;
  sphereSource->SetThetaResolution(20);
  sphereSource->SetPhiResolution(11);
  sphereSource->SetRadius(0.00004);

  const double coneCenterParam = 0.45;
  const double coneLengthParam = 0.4498;
  const double coneRadius = 0.25;

  // Create cone number 1 (pointing in -z)
  vtkNew<vtkConeSource> cone1Source;
  cone1Source->SetHeight(2.0 * coneLengthParam);
  cone1Source->SetRadius(coneRadius);
  cone1Source->SetCenter(0.0, 0.0, coneCenterParam);
  cone1Source->SetDirection(0.0, 0.0, -1.0);
  cone1Source->Update();

  // Create cone number 2 (pointing in +z)
  vtkNew<vtkConeSource> cone2Source;
  cone2Source->SetHeight(2.0 * coneLengthParam);
  cone2Source->SetRadius(coneRadius);
  cone2Source->SetCenter(0.0, 0.0, -coneCenterParam);
  cone2Source->SetDirection(0.0, 0.0, 1.0);
  cone2Source->Update();

  vtkNew<vtkDataSetMapper> sphereMapper;
  sphereMapper->SetInputConnection(sphereSource->GetOutputPort());
  vtkNew<vtkDataSetMapper> cone1Mapper;
  cone1Mapper->SetInputData(cone1Source->GetOutput());
  vtkNew<vtkDataSetMapper> cone2Mapper;
  cone2Mapper->SetInputData(cone2Source->GetOutput());

  // All three actors are flat-shaded with edges drawn.
  vtkNew<vtkActor> sphereActor;
  sphereActor->SetMapper(sphereMapper);
  sphereActor->GetProperty()->SetInterpolationToFlat();
  sphereActor->GetProperty()->EdgeVisibilityOn();
  toggleMoireStripFix(true, sphereActor);

  vtkNew<vtkActor> cone1Actor;
  cone1Actor->SetMapper(cone1Mapper);
  cone1Actor->GetProperty()->SetInterpolationToFlat();
  cone1Actor->GetProperty()->EdgeVisibilityOn();
  toggleMoireStripFix(true, cone1Actor);

  vtkNew<vtkActor> cone2Actor;
  cone2Actor->SetMapper(cone2Mapper);
  cone2Actor->GetProperty()->SetInterpolationToFlat();
  cone2Actor->GetProperty()->EdgeVisibilityOn();
  // With the next line commented out, cone 2 shows the Moire patterns when you
  // run the demo. Uncomment it to apply the fix to all three actors.
  // toggleMoireStripFix(true, cone2Actor);

  vtkNew<vtkRenderer> renderer;
  // The problem shows up with parallel projection.
  renderer->GetActiveCamera()->ParallelProjectionOn();

  vtkNew<vtkRenderWindow> renderWindow;
  renderWindow->AddRenderer(renderer);
  renderWindow->SetSize(640, 480);

  vtkNew<vtkRenderWindowInteractor> interactor;
  interactor->SetRenderWindow(renderWindow);

  renderer->AddActor(sphereActor);
  renderer->AddActor(cone1Actor);
  renderer->AddActor(cone2Actor);
  renderer->ResetCameraClippingRange();

  renderWindow->SetWindowName("Demo");
  renderWindow->Render();
  interactor->Start();

  return EXIT_SUCCESS;
}
```
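The demo scene is deliberately multi-scale. The two cones together span almost 1.8 units along z, while the sphere between their tips has a radius of only 0.00004. The sketch below works through that arithmetic with the parameters from `main`. It assumes that `vtkConeSource` places the apex at `Center + (Height / 2) * Direction` and the base at `Center - (Height / 2) * Direction`, which is our reading of its behaviour. The helper functions are hypothetical and exist only for this illustration.

```cpp
#include <cassert>

// Parameters copied from main() in the demo.
constexpr double kConeCenterParam = 0.45;
constexpr double kConeLengthParam = 0.4498;
constexpr double kSphereRadius = 0.00004;

// Hypothetical helpers for a cone whose axis is the z axis. ASSUMPTION:
// apex = center + (height / 2) * direction, base = center - (height / 2) * direction.
double coneApexZ(double centerZ, double height, double directionZ) {
  assert(height > 0.0);
  return centerZ + 0.5 * height * directionZ;
}

double coneBaseZ(double centerZ, double height, double directionZ) {
  assert(height > 0.0);
  return centerZ - 0.5 * height * directionZ;
}

// Width of the gap between the tips of cone 1 (pointing -z) and cone 2 (pointing +z).
double apexGap() {
  const double height = 2.0 * kConeLengthParam;
  const double tip1 = coneApexZ(kConeCenterParam, height, -1.0);  // about +0.0002
  const double tip2 = coneApexZ(-kConeCenterParam, height, 1.0);  // about -0.0002
  return tip1 - tip2;                                             // about 0.0004
}

// Ratio between the z extent of the whole scene and the sphere diameter.
double sceneToSphereRatio() {
  const double height = 2.0 * kConeLengthParam;
  const double top = coneBaseZ(kConeCenterParam, height, -1.0);     // about +0.8998
  const double bottom = coneBaseZ(-kConeCenterParam, height, 1.0);  // about -0.8998
  return (top - bottom) / (2.0 * kSphereRadius);                    // about 22495
}
```

Under that assumption, the tips are about 0.0004 apart, which is wide enough for the sphere (diameter 0.00008). Seeing the sphere clearly therefore takes more than four orders of magnitude of zoom relative to the full scene.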