I am considering parallelizing the heavy part of finding the closest point and introducing line-search to speed-up vtkIterativeClosestPointTransform. Has this been done before - or any thoughts on this?

“…parallelizing the heavy part of finding the closest point and introducing line-search to speed-up…” Can you provide references, more details to describe the approach?

I am working on it right now - just the parallization. I will see if I can find some good (non-propriarity) references and provide the details about speeding up the algorithm. Another thing that I am also looking into is to possibility to provide a noise dustribution. During the week I will find some good references.

Some time next week, I will make a small merge-request with a test for the current vtkIterativeClosestPointTransform, it is not covered by any tests. It will also help others who are used to work with a reference and a moving point cloud, rather than source and target. I primarily reached in case anybody had looked into this earlier or perhaps knew that another VTK-based open-source project had looked into this.

Our main problem with iterative closest point is not its speed. It is already fast enough for relatively large meshes. Even if the algorithm was slow for some humongous meshes, you would not need to speed up ICP for that, instead you could decimate one mesh and/or randomly drop points from the other mesh, as you can compute rigid registration very accurately from just a few thousand points.

If there is time to VTK’s ICP implementation then please don’t spend it on making the current very simple algorithm faster but make it more robust.

Basic ICP method usually does not converge on real data because it would require very accurate initialization. This initialization is not trivial if the data is noisy or we are trying to register partial meshes (e.g., because they were acquired from different viewpoints or different imaging modality). This could be fixed by using a better initialization method and/or more robust optimization technique.

I heard that mesh registration in Open3D and CloudCompare (see some usage notes here) work quite well in practice. Therefore, we would not need to invent anything new, just make some already proven methods available in VTK.

Thanks @lassoan . I am working on solving a real-time SLAM problem. Building up a dental model in real-time using 2.5D images. For the initialization, I use a trajectory for prediction.

For robust-ness, I am using a distance threshold on the correspondences using either a fixed cutoff or some simple statistics. This could be added to the VTK ICP implementation. Also, I am looking into supporting a noise model, say that we know that the error on the positions is larger in one direction. To handle this, I am providing an orientation as FieldData, since this may differ from the orientation provided with the vtkPolyData. I will look into what CloudCompare does to get some idea.

I am down to using less than 10 iterations, but it is still roughly an order-of-magnitude to slow for my real-time purposes.

After studying things a bit more, some general ideas may lead to general improvements for the VTK ICP implementation.

Thanks for reaching out

Jens

I see, for real-time applications indeed the current ICP might not be fast enough, especially if you use the full-resolution scan for registration.

Looking forward to seeing your distance threshold based outlier rejection or more sophisticated methods from Open3D etc. in VTK!

I limit the number of points and use around 500 landmarks. I have had a lot of success with sorting the signed distances and removing e.g. a quantile for both negative and positive. Alternatively, I could truncate using +/- one standard deviation. I like this better than a fixed cutoff like done in VMTK.

I will see if I can find some good references. I am currently working an ICP which can be used for meshes. The optimization is done using a Levenberg Marquardt and the cost function supports an either fixed metric (expected noise depend on an orientation) or where the error term depend on the local metric, e.g. the noise metric uses the local surface normals. It will only work for polydata if normals are used. The only little tweak is that for polydata you cannot set an orientation like you can now do for vtkImageData. Before submitting anything, I will find a good paper describing the approach - it must possible to find that.

Sorry for bringing this up again.

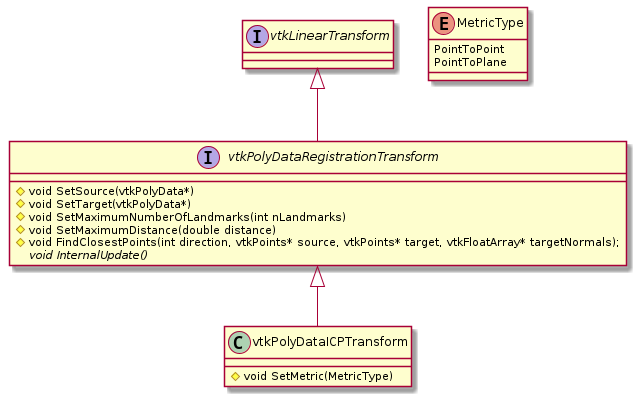

I have made the classes below for registrations of vtkPolyData. Using a point-to-plane metric (easy addition) makes the convergence about 10 times faster. Also, it is important to have a MaxDistance to threshold the correspondences found.

I am using vtkSMPTools for parallelizing the closest points found using vtkImplicitPolyDataDistance, I will off course need to use the dispatcher and change the array to vtkDataArray.

Next step is to solve the problem using Gauss-Newton. Right now, correspondences are found in VTK and the registration is made using Eigen.

It is a work-in-progress.

Article with the algorithms: https://www.robots.ox.ac.uk/~vgg/publications/2001/Fitzgibbon01c/fitzgibbon01c.pdf

In order for this to fix into VTK, I would probably need to use PCA for for finding normals in case either source or target has no normals.

The reason for the interface vtkPolyDataRegistrationTransform is because I am working with multiple registration algorithms and all of them need correspondences and it is convenient to have the calculations of those in a base class.

An alternative would be to introduce a filter with two inputs, a method for setting the current transform and this should output two point clouds with normals if it is configured for this. We could call this vtkPolyDataCorrespondenceFilter.

What are you thoughts

I have started a small open-source project demonstrating the idea, where also the point-to-plane registration is implemented.