I have two data sets: a CT volume (DICOM or NIfTI format) and a segmentation (NIfTI or NRRD format) created in 3D Slicer. I need to display both of them with the segmentation correctly positioned. However, the two data sets have different spacing and origin. For example, the CT volume has origin (0, 0, 0) and spacing (0.000826, 0.000826, 0.001500), while the segmentation has origin (0.160760, 0.293096, -0.813930) and spacing (0.001000, 0.001000, 0.001000). How can I correctly position the second data set (the segmentation) relative to the first one (the whole volume)?
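A minimal sketch of the underlying arithmetic, assuming both images are axis-aligned (no rotated axes) and that both origins are expressed in the same physical coordinate system: a voxel index maps to a physical position as origin + index × spacing, and that position maps back into the other image's voxel grid. The helper names are illustrative only (not a VTK API); the numbers are the ones quoted in the question.

```python
def index_to_physical(index, origin, spacing):
    """Physical position of a voxel index (axis-aligned image)."""
    return tuple(o + i * s for i, o, s in zip(index, origin, spacing))


def physical_to_index(point, origin, spacing):
    """Continuous (fractional) voxel index of a physical point."""
    return tuple((p - o) / s for p, o, s in zip(point, origin, spacing))


# Geometry quoted in the question.
ct_origin = (0.0, 0.0, 0.0)
ct_spacing = (0.000826, 0.000826, 0.001500)
seg_origin = (0.160760, 0.293096, -0.813930)
seg_spacing = (0.001000, 0.001000, 0.001000)

# Where does the first segmentation voxel fall inside the CT voxel grid?
p = index_to_physical((0, 0, 0), seg_origin, seg_spacing)
ct_index = physical_to_index(p, ct_origin, ct_spacing)
print("physical position:", p)
print("CT voxel index:", [round(v, 2) for v in ct_index])
```

With these particular numbers the first segmentation voxel lands at a negative z index (about -543) in the CT grid. Unless the CT's extent starts below zero, that suggests the two origins are not actually expressed in the same frame, for example because one of the readers ignored the origin stored in the file.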

I think you can use vtkImageReslice to create a new vtkImageData.

Thanks snow! I already tried vtkImageReslice with the SetInformationInput() method to resample the segmentation onto the volume’s geometry. Unfortunately, it didn’t work: in this case I don’t see the segmentation at all.

In recent Slicer Preview Releases, when you save the segmentation you have the option of enabling/disabling cropping to minimally necessary region size. If your software cannot display images in arbitrary coordinate systems then disabling cropping could be a simple workaround (because then image origin, spacing, axis directions, and extents of the segmentation will be exactly the same as the segmented volume’s).

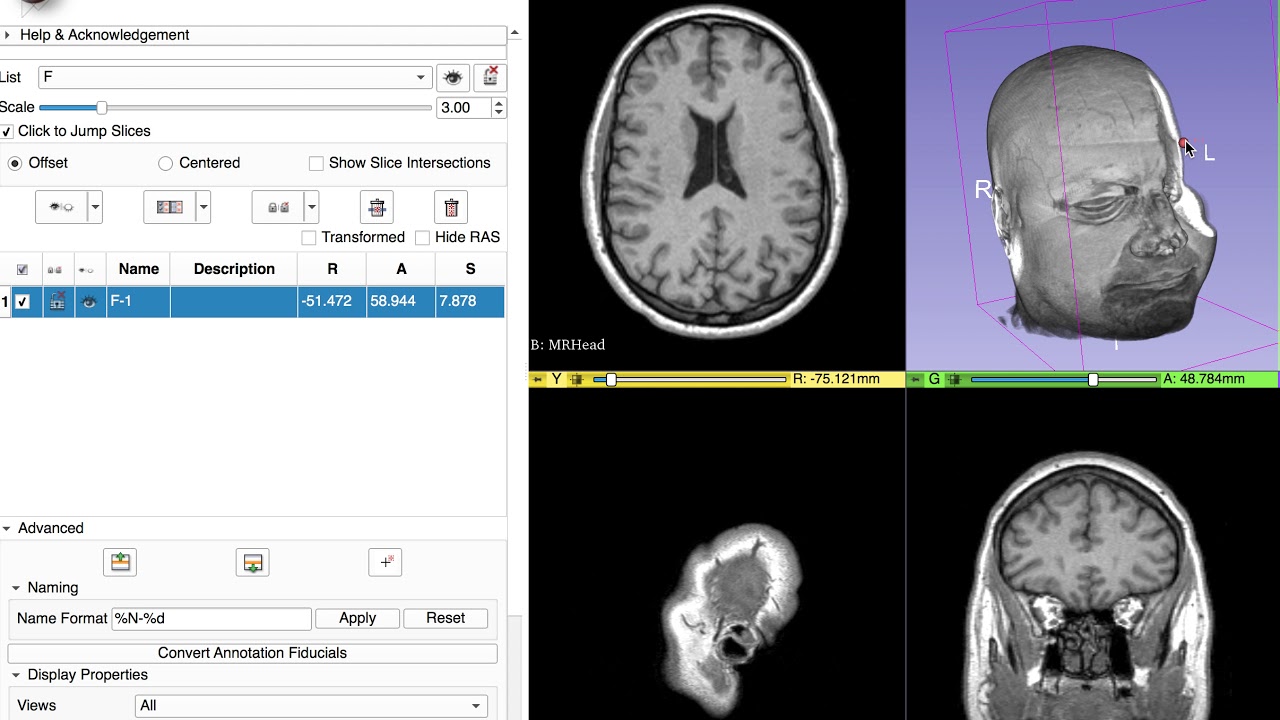

Since 3D Slicer is based on VTK, already contains all essential medical image visualization, import/export, and analysis features, and is highly customizable and extensible, it generally makes much more sense to implement your workflow within Slicer rather than to start writing a new application from scratch. You can find training materials here, and you can ask for help on the Slicer forum whenever you have a question.

Thanks Andras! Yes, I can correctly display segmentations that are not cropped, or at least the NIfTI segmentations that are saved in RAS coordinates. The problem is with cropped segments and with files that use a different coordinate system. I could only find a DicomToRas converter, but nothing for NIfTI or NRRD.

All exported files are in the same (LPS) physical coordinate system. If you enable cropping in the Save data dialog (or use a Slicer version that does not have the cropping enable/disable option), the voxel coordinate system is adjusted so that the exported segmentation’s extents are minimized.
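To make the LPS/RAS distinction concrete: 3D Slicer works in RAS internally, DICOM (and, per the reply above, Slicer’s exported files) use LPS, and NIfTI’s qform/sform matrices map voxel indices to RAS. The two conventions differ only in the sign of the first two axes, so the same flip converts in both directions. A minimal plain-Python sketch; the function names are hypothetical, not a VTK or Slicer API.

```python
# Flip matrix: negates the first two axes (x and y), keeps z.
FLIP_XY = [[-1, 0, 0, 0],
           [0, -1, 0, 0],
           [0, 0, 1, 0],
           [0, 0, 0, 1]]


def flip_point(p):
    """RAS -> LPS or LPS -> RAS for a single point (the flip is its own inverse)."""
    x, y, z = p
    return (-x, -y, z)


def matmul4(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def flip_matrix(ijk_to_world):
    """Convert an IJK-to-RAS matrix to IJK-to-LPS (or vice versa)."""
    return matmul4(FLIP_XY, ijk_to_world)


# A voxel-index-to-RAS matrix: 0.5 x 0.5 x 2 voxels, voxel (0, 0, 0) at RAS (10, 20, 30).
ijk_to_ras = [[0.5, 0, 0, 10],
              [0, 0.5, 0, 20],
              [0, 0, 2.0, 30],
              [0, 0, 0, 1]]
print(flip_point((10, 20, 30)))
for row in flip_matrix(ijk_to_ras):
    print(row)
```

Only the world side of the matrix changes; the voxel (IJK) side, and therefore the image data itself, stays the same.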

You could also use vtkImageChangeInformation to change the spacing and origin of one of the vtkImageData objects.

In my case this would be incorrect. The segmentation’s extents are much smaller than the volume’s, and its origin must be preserved.

I found out that the NIfTI reader sets the segmentation origin to (0, 0, 0). As far as I understand, I have to get the origin from the qform matrix (the last column) and set it directly on the vtkImageData via SetOrigin. Is this correct? Either way, my segmentation is still positioned incorrectly.
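Taking the last column is the right idea for the position of voxel (0, 0, 0), but two other details matter: NIfTI world coordinates are RAS, so x and y must be negated to get LPS, and the upper-left 3x3 block encodes both axis directions and spacing (each column is a unit direction scaled by the voxel size). The sketch below decomposes a 4x4 voxel-index-to-RAS matrix given as nested lists, assuming it is in the NIfTI file’s own convention (for example the matrix built from the qform parameters, or the sform). If you use vtkNIFTIImageReader, our reading of its documentation is that its QFormMatrix is applied to VTK data coordinates, which already include spacing, so its 3x3 block should hold unit directions only; please double-check against the VTK docs. Function names are hypothetical.

```python
import math


def decompose_ijk_to_ras(m):
    """Split a 4x4 voxel-index-to-RAS matrix (nested lists, row-major)
    into origin, spacing and unit axis directions (all in RAS)."""
    origin = tuple(m[r][3] for r in range(3))  # last column: RAS position of voxel (0, 0, 0)
    spacing, directions = [], []
    for c in range(3):
        col = [m[r][c] for r in range(3)]
        s = math.sqrt(sum(v * v for v in col))  # column length = voxel size along axis c
        spacing.append(s)
        directions.append(tuple(v / s for v in col))  # unit vector of axis c
    return origin, tuple(spacing), directions


def ras_to_lps(v):
    """Negate x and y to go from RAS to LPS (works for points and directions)."""
    return (-v[0], -v[1], v[2])


# Example matrix: axes point toward -R, -A, +S with 0.5 x 0.5 x 2 voxels.
qform = [[-0.5, 0.0, 0.0, 10.0],
         [0.0, -0.5, 0.0, 20.0],
         [0.0, 0.0, 2.0, -30.0],
         [0.0, 0.0, 0.0, 1.0]]
origin_ras, spacing, dirs_ras = decompose_ijk_to_ras(qform)
origin_lps = ras_to_lps(origin_ras)
dirs_lps = [ras_to_lps(d) for d in dirs_ras]
print(origin_lps, spacing, dirs_lps)
```

In this example the negated x and y axes in RAS become an axis-aligned image in LPS, a common situation for volumes that originate from axial DICOM series.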

The NIfTI file format was developed specifically for neuroimaging, so only use it if you work in that field: it has hardcoded concepts that are useful for neuroimaging but make it ill-suited for general use. I would recommend using the general-purpose NRRD file format instead. VTK’s NRRD reader retrieves image origin and spacing correctly. If your images do not have rotated axes, then you have all the information needed to resample the image into the same coordinate system using the vtkImageReslice filter.
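For the axis-aligned case, resampling a segmentation onto the CT grid boils down to: for each CT voxel, compute its physical position, find the nearest segmentation voxel, and copy its label (or leave background if it falls outside the segmentation). Conceptually this is similar to what vtkImageReslice does when the CT is set as information input and nearest-neighbor interpolation is selected (label maps should not be linearly interpolated). The plain-Python sketch below is not VTK’s implementation; the function name and the flat, x-fastest array layout are assumptions made for illustration.

```python
import math


def resample_nearest(seg, seg_dims, seg_origin, seg_spacing,
                     ref_dims, ref_origin, ref_spacing, background=0):
    """Resample an axis-aligned label volume onto a reference grid.

    Volumes are flat lists in x-fastest order (index = x + nx*(y + ny*z)),
    the same memory layout vtkImageData uses for its scalars.
    """
    nx, ny, nz = ref_dims
    out = [background] * (nx * ny * nz)
    for z in range(nz):
        for y in range(ny):
            for x in range(nx):
                # Physical position of this reference (CT) voxel.
                p = [ref_origin[a] + (x, y, z)[a] * ref_spacing[a] for a in range(3)]
                # Nearest segmentation voxel (round half up).
                i = [math.floor((p[a] - seg_origin[a]) / seg_spacing[a] + 0.5)
                     for a in range(3)]
                # Copy the label only if that voxel exists in the segmentation.
                if all(0 <= i[a] < seg_dims[a] for a in range(3)):
                    src = i[0] + seg_dims[0] * (i[1] + seg_dims[1] * i[2])
                    out[x + nx * (y + ny * z)] = seg[src]
    return out


# Toy example: a 2x2x1 segmentation placed at (1, 1, 0) inside a 4x4x1 reference grid.
seg = [1, 2, 3, 4]
out = resample_nearest(seg, (2, 2, 1), (1.0, 1.0, 0.0), (1.0, 1.0, 1.0),
                       (4, 4, 1), (0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
for row in range(4):
    print(out[row * 4:(row + 1) * 4])
```

Rounding half up with math.floor(v + 0.5) avoids Python’s round(), which rounds exact halves to even; which neighbor wins at an exact half-voxel tie is an arbitrary choice either way.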

Note: If you want to develop an application from scratch to show medical images, then you have a lot of work ahead. For example, until very recently VTK did not support images with rotated axes, and most IO and filter classes are probably still not fully prepared for them, so you either need to fix these in VTK or implement workarounds in your code. You also need to learn a lot: many more, and much more complicated, things than just resampling two volumes that have different spacing and origin. Learning would start with reading the VTK textbook several times, and running and understanding the techniques presented in hundreds of relevant VTK examples and tests. An application that you create from scratch will most likely remain just a case study, because most features that you could develop in a few years are already available in existing open-source software, such as 3D Slicer (and the very few specialized features you need can be implemented very quickly by extending or customizing existing platforms). Learning VTK is useful, so I would encourage you to do that, regardless of whether you develop a new application from scratch or extend existing VTK-based software.

Thanks, Andras! I understand that there’s a huge amount of work to be done.

Just to clarify, it is only a huge amount of work if you implement an application from scratch. If you extend an existing VTK-based open-source platform, then you just need to spend a couple of weeks getting familiar with VTK, a little bit of Qt, and maybe some ITK, and you are good to go: you can develop fully functional custom medical applications in weeks (instead of years), and your maintenance workload is minimal, too (because the amount of custom code that you need to develop and maintain is very small).

The problem is that I need to couple the VTK rendering pipeline with Unity’s. I’m using an open-source native plugin for this, but it requires patching VTK’s external rendering so that it is compatible with the Unity camera. I’m worried that I might end up spending years patching another VTK-based platform or tool.

Do you use Unity for virtual reality?

3D Slicer’s built-in virtual reality support is quite good, too. It is based on VTK’s virtual reality implementation, but it is extended with additional features and fully integrated with Slicer’s data model and visualization pipeline. You can visualize content of the 3D scene in virtual reality by a single button click and you can move around or interact with meshes and volumes using the controllers.

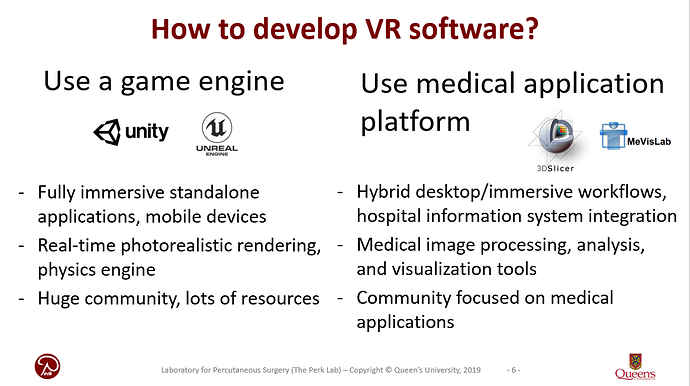

Unity is intended for gaming applications, and it is really good at that (and at all game-like applications), but it is extremely limited when you consider it as a medical application platform. See this high-level comparison slide from one of my presentations at a recent conference:

Unfortunately, Slicer’s VR extension is not enough for the project I’m working on. Besides volume rendering, I also need cutting planes that can cut at any angle and position of the controller, a scene, UI, interaction, loading of different volumes and segmentations in VR, and many more tools in the future. I extended the original VTK native plugin and have already implemented most of this. The only problem that has come up is volume positioning and orientation. The data I was given before had the same origin and extents, and I assume the same orientation. Now I have other data sets (DICOM or NIfTI for volumes, NIfTI or NRRD for segmentations), and I’m stuck with their qform and patient matrices (in VTK 8.1, NRRD images have no orientation at all). By the way, I saw your commits regarding directions in vtkImageData in the newer version of VTK, but as far as I understand they are not ready yet. Is that correct?
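When axes are rotated, origin and spacing alone are not enough: a direction matrix D is needed, and the physical position of a voxel becomes origin + D · (spacing ⊙ index), where the columns of D are the unit vectors of the image’s i, j, k axes. This is the convention ITK uses and, as far as we know, the one adopted by the direction matrix in newer vtkImageData. A minimal plain-Python sketch with a hypothetical helper; with D set to the identity it reduces to the axis-aligned formula.

```python
def index_to_physical(index, origin, spacing, direction):
    """Physical position of voxel `index` for an image with rotated axes.

    direction is a 3x3 row-major matrix whose columns are the unit vectors
    of the image's i, j, k axes (identity = axis-aligned image).
    """
    scaled = [index[c] * spacing[c] for c in range(3)]
    return tuple(origin[r] + sum(direction[r][c] * scaled[c] for c in range(3))
                 for r in range(3))


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
# Image rotated by 90 degrees about z: the i axis points along +y, the j axis along -x.
rot_z90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]

origin = (10.0, 0.0, 0.0)
spacing = (2.0, 2.0, 2.0)
print(index_to_physical((1, 0, 0), origin, spacing, identity))
print(index_to_physical((1, 0, 0), origin, spacing, rot_z90))
```

Dropping the orientation (treating D as the identity when it is not) moves every voxel except (0, 0, 0), which is one way a segmentation can end up visibly misplaced even though its origin and spacing are correct.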

SlicerVR already provides cutting planes with arbitrary orientation that you can position using the controller (you can set this up with a few clicks, using the VolumeResliceDriver module). We have also hooked this up with custom shaders in VTK so that you can crop the volume rendering with a cone or sphere that is moved by the hand controllers:

I know that for a newcomer it is not obvious how powerful Slicer’s virtual reality features are, because we haven’t spent much time exposing them on the GUI (you need a couple of clicks in a couple of different modules to activate a certain feature). We have been working on displaying Qt widgets in virtual reality, which will make it easy to add a GUI for commonly needed features, but first we need to upgrade Slicer’s VTK to 8.9, which is planned within a few months, after the Slicer 5 release.

Slicer takes care of all of these: it can load, save, and display volumes with arbitrary orientation by maintaining the image geometry outside of vtkImageData (in the MRML volume node). After we upgrade to the latest VTK, we’ll start to use the image orientation stored in vtkImageData.
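The idea of keeping geometry outside vtkImageData can be illustrated with 4x4 matrices: each volume carries an IJK-to-RAS matrix built from its origin, spacing, and direction, and the mapping from a segmentation voxel to the corresponding CT voxel is the CT’s RAS-to-IJK matrix multiplied by the segmentation’s IJK-to-RAS matrix. This works even when the two images are rotated relative to each other. This is a plain-Python sketch of the math only, not Slicer’s MRML code; the function names are hypothetical, and the direction matrices are assumed to be orthonormal so that the inverse can be written in closed form.

```python
def ijk_to_ras(origin, spacing, direction):
    """4x4 IJK-to-RAS matrix (row-major nested lists) from image geometry."""
    m = [[direction[r][c] * spacing[c] for c in range(3)] + [origin[r]] for r in range(3)]
    m.append([0.0, 0.0, 0.0, 1.0])
    return m


def ras_to_ijk(origin, spacing, direction):
    """Inverse of ijk_to_ras, assuming `direction` is orthonormal:
    A^-1 = diag(1/spacing) * D^T and translation = -A^-1 * origin."""
    a_inv = [[direction[c][r] / spacing[r] for c in range(3)] for r in range(3)]
    t = [-sum(a_inv[r][c] * origin[c] for c in range(3)) for r in range(3)]
    m = [a_inv[r] + [t[r]] for r in range(3)]
    m.append([0.0, 0.0, 0.0, 1.0])
    return m


def matmul4(a, b):
    """Product of two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def apply(m, p):
    """Apply a 4x4 affine matrix to a 3D point."""
    h = (*p, 1.0)
    return tuple(sum(m[r][c] * h[c] for c in range(4)) for r in range(3))


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
rot_z90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]

ct = dict(origin=(0.0, 0.0, 0.0), spacing=(0.5, 0.5, 1.0), direction=identity)
seg = dict(origin=(10.0, 5.0, 2.0), spacing=(1.0, 1.0, 1.0), direction=rot_z90)

# Segmentation voxel -> RAS -> CT voxel, composed into one matrix.
seg_to_ct = matmul4(ras_to_ijk(**ct), ijk_to_ras(**seg))
print(apply(seg_to_ct, (1, 0, 0)))
```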

If you are implementing medical virtual reality applications, then you are probably in a better place in the VTK/ITK/Slicer family, because our goals, problems, and solutions are all very similar. In the big Unity game developer community, people have very different interests, and medical applications are just a very small niche.

Thanks Andras for your detailed answer! I’ll look at the MRML volume node code and Slicer’s VR extension again.

Yes, Unity itself has nothing to do with medical rendering, although the previous version of our project was implemented using only Unity shaders and ray tracing. But we needed many features from VTK, and implementing them from scratch would have taken a lot of time.

Hi @Darya_Berezniak -

Just so you know it’s not just @lassoan, I would also encourage you to try building your app on Slicer if at all possible. Not only is it better for all the reasons he mentioned, you also avoid black-box commercial software and license fees.

As you can see if you look at the history of VTK, such open community projects often outlive and outperform their commercial competitors.

Of course Unity has some slick interfaces - send a link if you have any cool demos to inspire (and humble) the SlicerVR team.

-Steve

Thanks Steve. I’ll investigate if we can use Slicer for our goals.