I have run into a strange problem: when the model runs, my computer's memory consumption is huge, about ten times the size of the model or more.

Has anyone encountered the same problem?

I look forward to your answers; discussion is welcome.

This is expected if you have a long pipeline or use complex filters. You can call ReleaseDataFlagOn on your filters to reduce their memory usage (at the cost of not caching their output).
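To make the trade-off concrete, here is a toy model of what a chain of filters holds in memory with and without a release-data flag. It is a simplified sketch, not VTK's actual memory accounting: it assumes each stage keeps its output until the next stage has consumed it, and the `PipelineMemory` struct and `estimatePipelineMemory` function are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Simplified model (not VTK's real accounting): each pipeline stage produces
// an output of outputSizesMB[i] megabytes and keeps it in memory.
struct PipelineMemory {
    double steadyStateMB; // memory still held after the whole pipeline has run
    double peakMB;        // largest amount held at any moment during execution
};

PipelineMemory estimatePipelineMemory(const std::vector<double>& outputSizesMB,
                                      bool releaseDataFlag) {
    assert(!outputSizesMB.empty());
    double held = 0.0;
    double peak = 0.0;
    for (std::size_t i = 0; i < outputSizesMB.size(); ++i) {
        // While stage i runs, its input (the previous output) and its new output coexist.
        held += outputSizesMB[i];
        if (held > peak) peak = held;
        // With the release-data flag, the previous stage's output is freed
        // once stage i has consumed it; without it, the output stays cached.
        if (releaseDataFlag && i > 0) held -= outputSizesMB[i - 1];
    }
    return {held, peak};
}
```

With three 1000 MB stages, the model holds 3000 MB when every output is cached and 1000 MB once intermediates are released, although the peak during execution is still 2000 MB. A one-stage case (a reader feeding a mapper directly) gains nothing in this model, because the last output is exactly what the mapper needs to render.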

Memory consumption should be tracked and measured with a profiler (e.g. Valgrind) or, if you're not familiar with profilers, by careful code analysis. I've had memory consumption issues in the past, and they were all caused by memory leaks in my own code, not by VTK.
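Before reaching for a full profiler, a quick sanity check is to read the process's resident memory before and after a step such as loading one tile. The sketch below assumes Linux, where the kernel reports this as the `VmRSS` line of `/proc/self/status`; on Windows (the platform in this thread) the equivalent figure comes from a different API such as `GetProcessMemoryInfo`, which this sketch does not cover. The function names are hypothetical.

```cpp
#include <cassert>
#include <fstream>
#include <sstream>
#include <string>

// Returns the resident set size (physical memory currently used) of this
// process in kibibytes, read from /proc/self/status. Linux-only; returns -1
// if the field cannot be found or parsed (e.g. on Windows or macOS).
long currentRssKiB() {
    std::ifstream status("/proc/self/status");
    std::string line;
    while (std::getline(status, line)) {
        // The relevant line looks like: "VmRSS:     123456 kB"
        if (line.rfind("VmRSS:", 0) == 0) {
            std::istringstream fields(line.substr(6));
            long kib = 0;
            if (fields >> kib) return kib;
            return -1;
        }
    }
    return -1;
}

// Measures how much the resident memory grew while `work` ran (Linux-only).
template <typename F>
long rssGrowthKiB(F&& work) {
    const long before = currentRssKiB();
    work();
    const long after = currentRssKiB();
    assert(before >= 0 && after >= 0 && "VmRSS not available on this platform");
    return after - before;
}
```

For example, wrapping a single `reader->Update()` call in `rssGrowthKiB` shows how much that step alone added. Memory that keeps climbing across repeated load/unload cycles suggests a leak, whereas a one-off jump that then stays flat suggests the data is simply large.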

Dear lassoan, today I tried your suggestion of calling ReleaseDataFlagOn() on vtkOBJReader, but I don't think it helps.

The model files total 960 MB, and about 10 GB of memory is used.

Here is the relevant part of my code:

```cpp
#include <QDir>
#include <QFileInfo>
#include <QString>

#include <vtkActor.h>
#include <vtkJPEGReader.h>
#include <vtkOBJReader.h>
#include <vtkPolyDataMapper.h>
#include <vtkRenderer.h>
#include <vtkSmartPointer.h>
#include <vtkTexture.h>

#include <string>

// `renderer` (a vtkRenderer) is created elsewhere in the application.
QString rootPath("C:/work/lod/20cm_880m/obj/Data/"); // there are many model files under this directory

QDir dir(rootPath);
foreach (const QFileInfo &entry, dir.entryInfoList())
{
    if (entry.isDir())
    {
        QString dirName = entry.baseName();
        if (dirName == "" || dirName == "." || dirName == "..") continue;

        // Each tile directory holds <name>/<name>.obj and its texture <name>/<name>_0.jpg
        std::string filename = QString("%1/%2/%2.%3").arg(rootPath).arg(dirName).arg("obj").toStdString();

        auto reader = vtkSmartPointer<vtkOBJReader>::New();
        reader->SetFileName(filename.c_str());
        reader->ReleaseDataFlagOn();
        reader->Update();

        auto mapper = vtkSmartPointer<vtkPolyDataMapper>::New();
        mapper->SetInputConnection(reader->GetOutputPort());

        auto actor = vtkSmartPointer<vtkActor>::New();
        actor->SetMapper(mapper);

        auto jpegReader = vtkSmartPointer<vtkJPEGReader>::New();
        jpegReader->SetFileName(QString("%1/%2/%2_0.%3").arg(rootPath).arg(dirName).arg("jpg").toStdString().c_str());
        jpegReader->Update();

        auto texture = vtkSmartPointer<vtkTexture>::New();
        texture->SetInputConnection(jpegReader->GetOutputPort());
        actor->SetTexture(texture);

        renderer->AddActor(actor);
    }
}
```
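One thing file sizes on disk do not show: each tile in this code also loads a JPEG texture, and JPEG is compressed on disk but decoded into raw pixels in RAM. A rough estimate of that decoded size is sketched below. The 4096 × 4096 RGB resolution and the tile count used with it are hypothetical, since the thread does not state them, and any extra copies made by the graphics driver are not counted.

```cpp
#include <cassert>
#include <cstdint>

// Bytes needed to hold one decoded (uncompressed) texture in RAM:
// width * height * channels, assuming 8 bits per channel.
std::uint64_t decodedTextureBytes(std::uint64_t width, std::uint64_t height,
                                  std::uint64_t channels) {
    assert(channels >= 1 && channels <= 4);
    return width * height * channels;
}

// Total decoded size, in GiB, for `tiles` textures of the same resolution.
double totalTextureGiB(std::uint64_t tiles, std::uint64_t width,
                       std::uint64_t height, std::uint64_t channels) {
    const double bytes =
        static_cast<double>(tiles * decodedTextureBytes(width, height, channels));
    return bytes / (1024.0 * 1024.0 * 1024.0);
}
```

With those assumed numbers, one texture needs 48 MiB once decoded, and 100 such tiles need about 4.7 GiB, regardless of how small the `.jpg` files are on disk.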

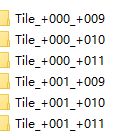

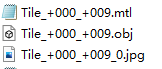

And the directory structure looks like this:

I ran the same test with vtkOBJImporter and got the same result.

I implemented the same functionality in a web application, and this problem does not occur there.

So, have you ever viewed a model made of many files, at around 10 GB, on a computer with 16 GB of memory?

Thanks.

Please don't post images of code.

https://idownvotedbecau.se/imageofcode

Thank you, I have edited it.

Well, I have a multimillion-cell reservoir model that takes about 2 GB on disk (geometry + data), and it becomes a 16 GB unstructured grid in RAM once rendered. What are you modeling, by the way?

The model files total 960 MB; about 8 GB of memory is used after loading, and roughly 1 GB more when I interact with the model.

VTK 8.1, 8.2, and 9.0 all give the same result on Windows 10.

I have no idea how to solve this problem now.

Again: what are you modeling? Perhaps this is unavoidable with the naïve approach. Large data sets are what they are; sometimes they won't fit in memory, and that's that. Large models require a bit of strategy. I have a seismic survey whose SEG-Y file is 1 TB. The corresponding vtkImageData will never fit in memory by today's standards. How can we visualize it? You can subsample it, upscale it, show only slices of it, use LOD, you name it.
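As one concrete form of the subsampling mentioned here, the sketch below keeps every k-th sample along each axis of a regular 3D grid stored x-fastest, the point ordering `vtkImageData` uses. It is plain decimation with no smoothing beforehand, so thin features can disappear; the `Volume` type and `subsample` function are hypothetical. For image data, VTK's `vtkExtractVOI` offers the same kind of striding through `SetSampleRate`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// A regular grid of nx * ny * nz samples; x varies fastest, then y, then z.
struct Volume {
    std::size_t nx, ny, nz;
    std::vector<float> data;
};

// Keeps every k-th sample along each axis (plain decimation, no filtering).
Volume subsample(const Volume& in, std::size_t k) {
    assert(k >= 1);
    assert(in.data.size() == in.nx * in.ny * in.nz);
    Volume out;
    out.nx = (in.nx + k - 1) / k; // ceil(n / k) samples survive per axis
    out.ny = (in.ny + k - 1) / k;
    out.nz = (in.nz + k - 1) / k;
    out.data.reserve(out.nx * out.ny * out.nz);
    for (std::size_t z = 0; z < in.nz; z += k)
        for (std::size_t y = 0; y < in.ny; y += k)
            for (std::size_t x = 0; x < in.nx; x += k)
                out.data.push_back(in.data[(z * in.ny + y) * in.nx + x]);
    return out;
}
```

Striding by k on every axis cuts the sample count by roughly k³, so k = 4 would bring a 1 TB volume down to about 1 TB / 64 ≈ 15.6 GB, which still leaves little headroom on a 16 GB machine.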