I’m trying to do intrinsic rotation (not rotating camera!!) on a NIFTI image, but it is not working…I’ve been working on this for hours, and it is quite sad that it is still not working.

I saw the documentation regarding RotateX, RotateY, RotateZ (and WXYZ), so I used it as the transformation module as you can see in the code below.

Any help on this issue is more than appreciated!!

Thank you…

#import ants

import vtk, sys, os

from skimage.filters import threshold_otsu

output_dir = "./"

#head = output_dir + "mask-head.nii.gz"

#brain = output_dir + "mask-brain.nii.gz"

#tumor = output_dir + "mni_standard_mask_ventricle.nii.gz"

#

#head = ants.image_read(head)

#brain = ants.image_read(brain)

#tumor = ants.image_read(tumor)

#

##simplify head into binary values

#head = ants.threshold_image(head,low_thresh=threshold_otsu(head.numpy()))

#

##merge in ascending order of visibility: head -> brain -> ventricle

#head[brain!=0] = 2

#head[tumor!=0] = 3

#

##save

#ants.image_write(head,output_dir + "mask-merged.nii.gz")

#find euler

euler = (45,90,45)

#load to vtk

ren = vtk.vtkRenderer()

renWin = vtk.vtkRenderWindow()

renWin.AddRenderer(ren)

reader = vtk.vtkNIFTIImageReader()

reader.SetFileName(output_dir + "mask-merged.nii.gz")

reader.Update()

imageData = reader.GetOutput()

#marching cubes

MARCHING_CUBES_THRESHOLD = 0.01

fltMarching_whole = vtk.vtkMarchingCubes()

fltMarching_whole.SetInputData(imageData)

fltMarching_whole.ComputeScalarsOff()

fltMarching_whole.ComputeGradientsOff()

fltMarching_whole.ComputeNormalsOn()

fltMarching_whole.SetNumberOfContours(1)

fltMarching_whole.SetValue(0, MARCHING_CUBES_THRESHOLD)

fltMarching_whole.Update()

#finding centerpoint

centerFilter = vtk.vtkCenterOfMass()

centerFilter.SetInputConnection(fltMarching_whole.GetOutputPort())

centerFilter.SetUseScalarsAsWeights(False)

centerFilter.Update()

center = centerFilter.GetCenter()

#print(center)

#finding transformation formula

transform = vtk.vtkTransform()

transform.PostMultiply()

#translating to center

transform.Translate(-center[0], -center[1], -center[2])

#rotations in operating view order (patient not in operating view position yet)

transform.RotateZ(euler[0])

transform.RotateX(euler[1])

#transform.RotateY(euler[2])

#now doing some stuff...

imageScalars = imageData.GetPointData().GetScalars()

iMin, iMax = imageScalars.GetValueRange()

for i in range(1, int(iMax) + 1):

imageData_i = vtk.vtkImageData()

imageData_i.DeepCopy(imageData)

thresh = vtk.vtkImageThreshold()

thresh.SetInputData(imageData_i)

thresh.ThresholdBetween(i,i)

thresh.ReplaceInOn()

thresh.ReplaceOutOn()

thresh.SetInValue(i)

thresh.SetOutValue(0)

thresh.Update()

iMin, iMax = thresh.GetOutput().GetPointData().GetScalars().GetValueRange()

fltMarching_i = vtk.vtkMarchingCubes(); #***** Discrete version instead?

fltMarching_i.SetInputData(thresh.GetOutput())

fltMarching_i.ComputeScalarsOff()

fltMarching_i.ComputeGradientsOff()

fltMarching_i.ComputeNormalsOn()

fltMarching_i.SetNumberOfContours(1)

fltMarching_i.SetValue(0, MARCHING_CUBES_THRESHOLD)

fltMarching_i.Update()

# #**************** SMOOTHING

smoother_i = vtk.vtkWindowedSincPolyDataFilter()

smoother_i.SetInputConnection(fltMarching_i.GetOutputPort())

smoother_i.SetNumberOfIterations(50)

smoother_i.BoundarySmoothingOff();

smoother_i.FeatureEdgeSmoothingOff();

smoother_i.SetFeatureAngle(45) #120);

smoother_i.SetPassBand(0.1) #0.001);

smoother_i.NonManifoldSmoothingOn();

smoother_i.NormalizeCoordinatesOn();

smoother_i.Update();

normals_i = vtk.vtkPolyDataNormals()

normals_i.SetInputConnection(smoother_i.GetOutputPort())

normals_i.FlipNormalsOn()

#**************** CENTERING

transFilter_i = vtk.vtkTransformFilter()

transFilter_i.SetInputConnection(normals_i.GetOutputPort())

transFilter_i.SetTransform(transform)

# ********** Create the mapper and actor, add with a specific color

franMapper_i = vtk.vtkPolyDataMapper()

franMapper_i.SetInputConnection(transFilter_i.GetOutputPort()) #normals_i

franMapper_i.SetScalarModeToUseCellData()

franMapper_i.ScalarVisibilityOn()

franMapper_i.Update()

# ********* Add the actors to the renderer, set the background and size

franActor_i = vtk.vtkActor()

franActor_i.SetMapper(franMapper_i)

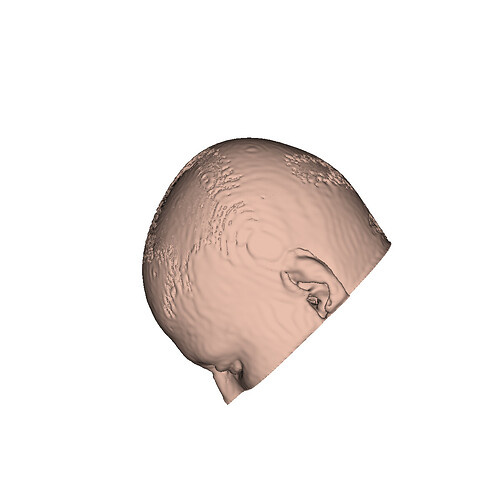

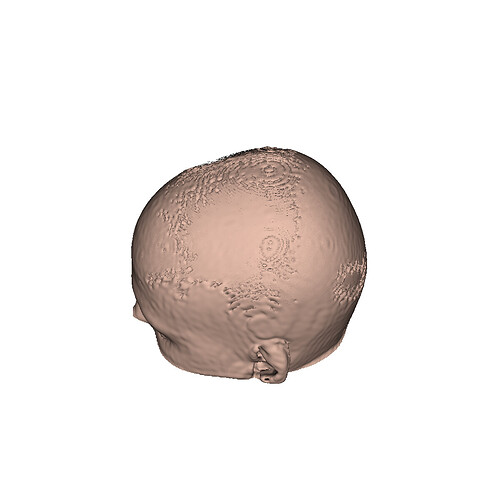

if i == 1: #head

franActor_i.GetProperty().SetColor(232/255,190/255,172/255)

elif i == 2: #brain

franActor_i.GetProperty().SetColor(232/255,160/255,132/255)

elif i == 3: #tumor

franActor_i.GetProperty().SetColor(1,0,0)

ren.AddActor(franActor_i)

#setting up camera

cam1 = vtk.vtkCamera()

(centerx,centery,centerz) = reader.GetOutput().GetCenter()

(xm,xM,ym,yM,zm,zM) = reader.GetExecutive().GetWholeExtent(reader.GetOutputInformation(0))

cam1.SetPosition(0, 0, zM*4.5)

cam1.SetFocalPoint(0,0,0)

cam1.SetViewAngle(30.0)

#cam1.Elevation(90.0) for patient / -90.0 is for local sample (not patient)

cam1.Elevation(90.0)

ren.SetActiveCamera(cam1)

ren.SetBackground(1.0, 1.0, 1.0)

renWin.SetSize(1200, 1200)

renWin.Render()

# **************** screenshot code:

w2if = vtk.vtkWindowToImageFilter()

w2if.SetInput(renWin)

w2if.Update()

writer = vtk.vtkPNGWriter()

writer.SetFileName("screenshot_test2.png")

writer.SetInputData(w2if.GetOutput())

writer.Write()