Dear repliers, thank you for taking the time to help me.

@Sankesh:

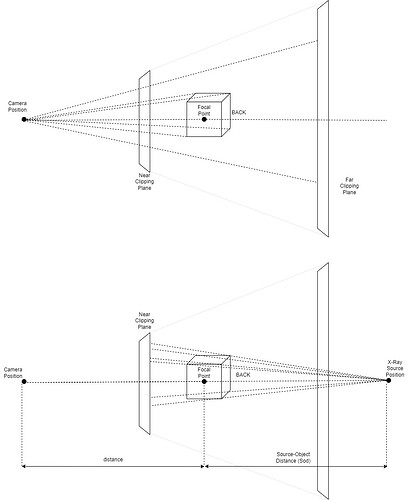

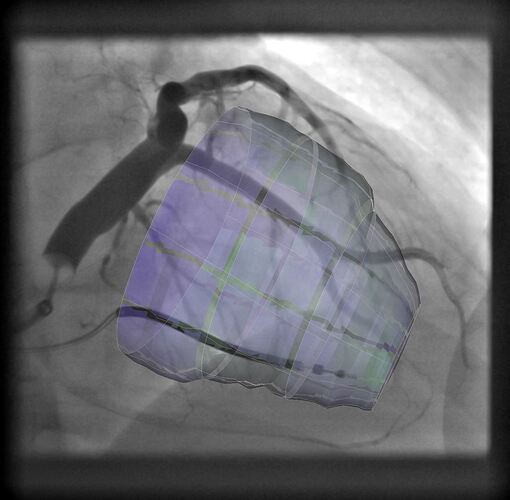

Thanks for your reply. Setting the explicit projection transform matrix is exactly what I’ve been trying up until now. I am able to set it and it has an effect on the projection. However, the devil is in the details of coming up with the right contents of the projection transform matrix. I am afraid this is because VTK uses OpenGL, DirectX or some other hardware-interfacing implementation to perform the perspective divide, but I am not yet sure.

@David:

Thanks for your suggestions. Reversing the camera would solve the projection problem, but it would also flip our polydata and it would place the vertices in the back in front of those in the front. I looked around, but I could not find a way to reverse the depth buffer checks. If you have solved this problem in the past, I would be very curious about the actual implementation.

@Andras:

Thank you for your reply. I am not quite sure if I understand what you mean by rendering the front and back surfaces differently. If I understand correctly, I would expect that all vertices are projected using the same projection matrix?

I tried inverting the sign of the value(s) in the projection matrix, as you suggested, but unfortunately this does not work. Is this the value that copies the z-value into the w-component?

If we have a matrix with entries indexed as row,column:

0,0 0,1 0,2 0,3

1,0 1,1 1,2 1,3

2,0 2,1 2,2 2,3

3,0 3,1 3,2 3,3

I tried flipping the sign of 2,2 2,3 and 3,2 separately.
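To make the three sign flips concrete, here is a minimal sketch in plain Python. It assumes the perspective matrix printed further down in this post is applied to row vectors (`point * matrix`), which is only one possible reading of the printout; the sample point and the helper names `transform_row_vector` and `with_flipped_sign` are hypothetical and not part of VTK.

```python
# Perspective matrix values as printed in this post, one list per printed row.
PERSPECTIVE = [
    [2.09928, 0.0, 0.0, 0.0],
    [0.0, 3.73205, 0.0, 0.0],
    [0.0, 0.0, -1.91509, -1.0],
    [0.0, 0.0, -468.303, 0.0],
]


def transform_row_vector(point, matrix):
    """Return point * matrix, treating point as a 1x4 row vector (assumption)."""
    return [sum(point[i] * matrix[i][j] for i in range(4)) for j in range(4)]


def with_flipped_sign(matrix, row, col):
    """Return a copy of matrix with the sign of entry (row, col) inverted."""
    flipped = [list(r) for r in matrix]
    flipped[row][col] = -flipped[row][col]
    return flipped


# Hypothetical camera-space point, 200 units along the negative z axis.
point = [10.0, 5.0, -200.0, 1.0]

results = {}
for row, col in [(2, 2), (2, 3), (3, 2)]:
    clip = transform_row_vector(point, with_flipped_sign(PERSPECTIVE, row, col))
    results[(row, col)] = clip
    print(f"flip {row},{col}: clip z = {clip[2]:.3f}, clip w = {clip[3]:.3f}")
```

Under this row-vector reading, only the flip of 2,3 changes the w component of the result; flipping 2,2 or 3,2 changes clip z and leaves w alone.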

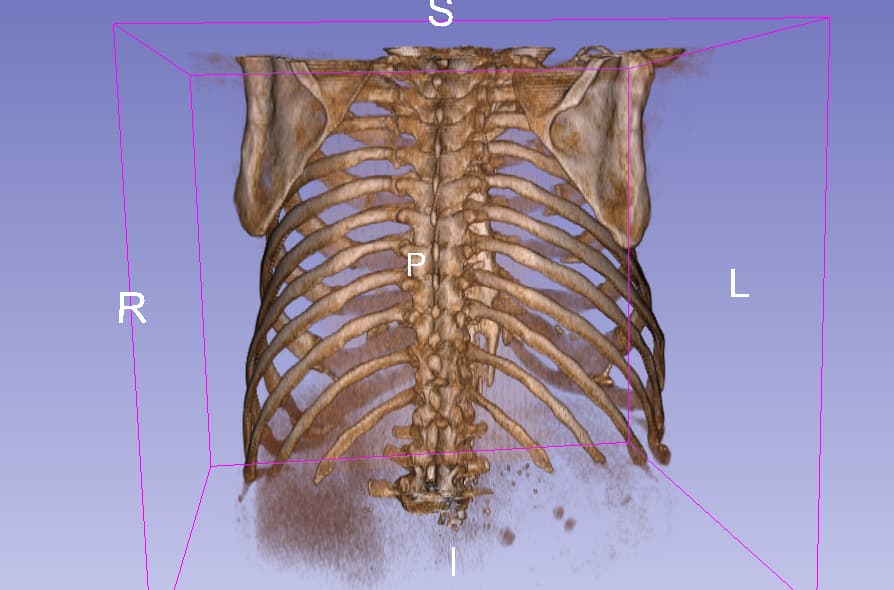

Below are the vtkCamera matrices printed for a standard perspective projection and a parallel/orthographic projection, with identical position, view up and focal point:

standard:

2.09928 0 0 0

0 3.73205 0 0

0 0 -1.91509 -1

0 0 -468.303 0

parallel:

0.00688308 0 0 0

0 0.0122366 0 0

0 0 -0.00569628 0

0 0 -1.91509 1

Looking at these, I would expect that value 2,3 is the z-to-w component copy value. Based on my (obviously) basic linear algebra knowledge and this source:

https://www.scratchapixel.com/lessons/3d-basic-rendering/perspective-and-orthographic-projection-matrix/opengl-perspective-projection-matrix.html

I suspect that the actual perspective divide is happening in the shader. I also suspect that what I am trying to achieve requires a perspective multiply.
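As a rough check of the z-to-w reading, the two printed matrices can be applied to the same point and divided by w by hand. This is a minimal sketch in plain Python, not what VTK or the shader actually does: it assumes the printed rows are meant for row vectors (`point * matrix`), and the sample point and the helper names `transform_row_vector` and `perspective_divide` are hypothetical.

```python
# Matrices as printed in this post, one list per printed row.
PERSPECTIVE = [
    [2.09928, 0.0, 0.0, 0.0],
    [0.0, 3.73205, 0.0, 0.0],
    [0.0, 0.0, -1.91509, -1.0],
    [0.0, 0.0, -468.303, 0.0],
]
PARALLEL = [
    [0.00688308, 0.0, 0.0, 0.0],
    [0.0, 0.0122366, 0.0, 0.0],
    [0.0, 0.0, -0.00569628, 0.0],
    [0.0, 0.0, -1.91509, 1.0],
]


def transform_row_vector(point, matrix):
    """Return point * matrix, treating point as a 1x4 row vector (assumption)."""
    return [sum(point[i] * matrix[i][j] for i in range(4)) for j in range(4)]


def perspective_divide(clip):
    """Divide x, y and z by w, the step normally done after the vertex stage."""
    x, y, z, w = clip
    return [x / w, y / w, z / w]


# Hypothetical camera-space point, 200 units along the negative z axis.
point = [10.0, 5.0, -200.0, 1.0]

clip_persp = transform_row_vector(point, PERSPECTIVE)
clip_par = transform_row_vector(point, PARALLEL)
ndc_persp = perspective_divide(clip_persp)
ndc_par = perspective_divide(clip_par)

print("perspective: w =", clip_persp[3], "ndc =", ndc_persp)
print("parallel:    w =", clip_par[3], "ndc =", ndc_par)
```

With these inputs the perspective matrix yields w equal to minus the input z, while the parallel matrix yields w = 1, so the divide only rescales the perspective result. That matches the expectation that 2,3 is the entry copying z into w, at least under the row-vector assumption.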