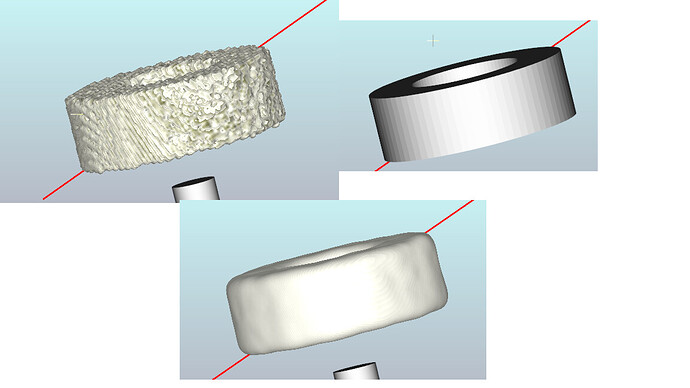

I am trying to get an imagedata from polydata but I am getting boundary voxels as cubes… applying vtkImageGaussianSmooth filter gives a smooth output but affects accuracy … is there another way?

This is exactly how it should work. A band-limited continuous signal (such as a 3D surface) can be reconstructed exactly from discrete samples (such as measurements at voxel positions). The Nyquist-Shannon sampling criterion specifies the minimum sampling frequency (that is, the maximum pixel spacing) needed to reconstruct a surface up to a certain spatial frequency (surface smoothness).

In practice, it means that you have to choose small enough voxel size so that all the details that are relevant for you can be preserved in the labelmap (and the surface that is reconstructed from it).

The most common application is surface editing. Many surface editing operations that are almost impossible to implement correctly on a polygonal mesh (such as mesh subtraction or shell generation) are trivial to do on labelmap representation. However, there is still a price to pay: if you need to preserve extremely small details and sharp edges then you will have to have lots of memory and computation power to deal with very large labelmaps.
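To make the voxel-size and memory trade-off concrete, here is a rough back-of-the-envelope sketch. It is not from the posts above: `LabelmapGrid` and `EstimateLabelmapGrid` are hypothetical names, and the rule "spacing = half of the smallest feature you care about" is only the Nyquist lower bound, so in practice you will probably want a finer grid.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

// Hypothetical helper type: grid parameters for a labelmap that covers a surface.
struct LabelmapGrid
{
  double spacing;                  // isotropic voxel size, same units as the bounds
  std::array<std::size_t, 3> dims; // number of voxels along x, y, z
  std::size_t bytes;               // memory needed for the voxel array
};

// bounds = {xmin, xmax, ymin, ymax, zmin, zmax}, as returned by vtkPolyData::GetBounds().
// smallestFeature = size of the smallest detail that must survive the conversion.
// Assumption: at least two samples per smallest feature (Nyquist lower bound).
LabelmapGrid EstimateLabelmapGrid(const std::array<double, 6>& bounds,
                                  double smallestFeature,
                                  std::size_t bytesPerVoxel = 1)
{
  LabelmapGrid grid;
  grid.spacing = smallestFeature / 2.0;
  grid.bytes = bytesPerVoxel;
  for (int i = 0; i < 3; ++i)
  {
    const double length = bounds[2 * i + 1] - bounds[2 * i];
    // +1 because N intervals along an axis need N + 1 sample points.
    grid.dims[i] = static_cast<std::size_t>(std::ceil(length / grid.spacing)) + 1;
    grid.bytes *= grid.dims[i];
  }
  return grid;
}
```

For a 100 x 100 x 200 mm bounding box and 1 mm features, this gives 0.5 mm spacing, a 201 x 201 x 401 grid, and about 16 MB at one byte per voxel. Halving the spacing multiplies the voxel count by roughly eight, which is the "price to pay" mentioned above.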

Thanks!

Hey, guys. Would you mind showing an example code snippet of doing this amazing filtering work?

I am having trouble smoothing a 3-dimensional polydata with vtkImageGaussianSmooth: it always gives me an empty result. (PS: I already tried vtkSmoothPolyDataFilter; it gives me a relatively smoothed actor, but I don’t like it.)

Here is “How I applied vtkImageGaussianSmooth”:

double gaussianRadius = 1.5;

double gaussianStandardDeviation = 0.8;

vtkSmartPointer<vtkImageGaussianSmooth> gaussian = vtkSmartPointer<vtkImageGaussianSmooth>::New();

gaussian->SetDimensionality(3);

gaussian->SetInputData(MYPOLYDATA);

gaussian->SetStandardDeviations(gaussianStandardDeviation,

gaussianStandardDeviation,

gaussianStandardDeviation);

gaussian->SetRadiusFactor(gaussianRadius);

gaussian->Update();

vtkSmartPointer<vtkPolyDataMapper> mapper = vtkSmartPointer<vtkPolyDataMapper>::New();

mapper->SetInputConnection(gaussian->GetOutputPort());

vtkSmartPointer<vtkActor> copyactor = vtkSmartPointer<vtkActor>::New();

copyactor->SetMapper(mapper);

copyactor->GetProperty()->SetColor(0.67,0.32,0);

copyactor->SetVisibility(true);

m_Renderer->AddActor(copyactor);

You need to convert the image to polydata (using flying edges, marching cubes, or contour filter) and set that as input of the polydata mapper.

Thanks for the reply! I am trying to use marching cubes now.

Here is my basic situation: I have an aorta actor rendered in the main window. I copy its polydata and create an actor from it in another renderer, which already works, so I know I have a usable polydata.

Here is my code now, it still gives me empty:

auto gaussianFilter = vtkSmartPointer<vtkImageGaussianSmooth>::New();

auto stripperFilter = vtkSmartPointer<vtkStripper>::New();

auto marchingCubesFilter = vtkSmartPointer<vtkMarchingCubes>::New();

marchingCubesFilter->ComputeScalarsOff();

marchingCubesFilter->ComputeGradientsOff();

marchingCubesFilter->SetNumberOfContours(1);

marchingCubesFilter->SetValue(0,0.0f);

gaussianFilter->SetInputData(MyCopiedPolyData);

float sigma = 0.8;

gaussianFilter->SetStandardDeviation(sigma, sigma, sigma);

gaussianFilter->SetRadiusFactors(1.5, 1.5, 1.5);

marchingCubesFilter->SetInputConnection(gaussianFilter->GetOutputPort());

stripperFilter->SetInputConnection(marchingCubesFilter->GetOutputPort());

vtkSmartPointer<vtkPolyDataMapper> mapper = vtkSmartPointer<vtkPolyDataMapper>::New();

mapper->SetInputConnection(stripperFilter->GetOutputPort());

vtkSmartPointer<vtkActor> copyactor = vtkSmartPointer<vtkActor>::New();

copyactor->SetMapper(mapper);

copyactor->GetProperty()->SetColor(0.67,0.32,0);

m_Renderer->AddActor(copyactor);