Is there a minimum ray length used in VTK for intersection tests? My script generates a point inside a 3D convex hull and fires rays of different lengths from that point in random directions, testing each one with `vtkOBBTree.IntersectWithLine`. This method works fine: the intersection points are identified and can be visualised along the ray, EXCEPT when the ray length is 0.01 or less. At those lengths no intersection is ever reported, even though my visualisation shows that the ray does cross the convex hull. This happens regardless of the size of the convex hull.

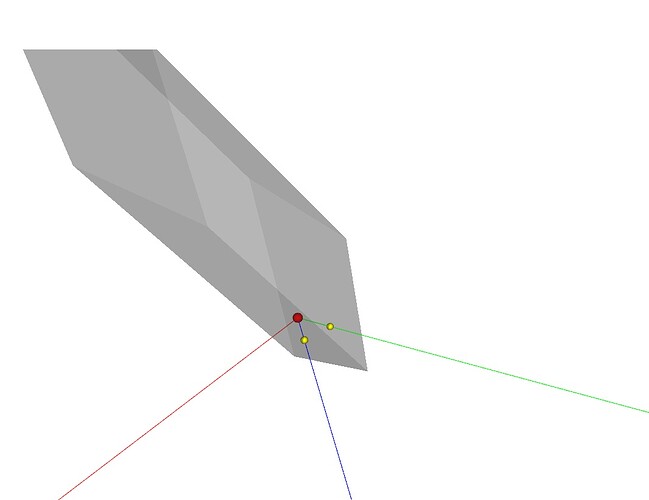

The attached image shows an example of this: a red point inside the grey convex hull has three rays shooting outwards from it. Along the green and blue rays, intersections were found and are marked with yellow points. The red ray, however, has a length of 0.01, and no intersection is found even though it clearly crosses the hull.
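As an independent sanity check (plain Python, not VTK and not my actual script), the sketch below clips a segment against a convex hull described by its face planes, using parametric (Cyrus-Beck style) clipping. It assumes the hull is given as outward normals `n` and offsets `d` such that interior points satisfy `n·x <= d`; the names `clip_segment`, `boundary_crossings` and `UNIT_CUBE` are hypothetical and only for illustration. If this reports a crossing for a 0.01-long ray while `IntersectWithLine` does not, the problem is in the locator rather than in the geometry.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
# Hypothetical hull representation: (outward normal n, offset d), inside means n.x <= d
Plane = Tuple[Vec3, float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def clip_segment(planes: Sequence[Plane], p0: Vec3, p1: Vec3) -> Optional[Tuple[float, float]]:
    """Return (t_enter, t_exit) of the part of p0->p1 inside the hull, or None."""
    direction = tuple(b - a for a, b in zip(p0, p1))
    t_enter, t_exit = 0.0, 1.0
    for normal, offset in planes:
        denom = dot(normal, direction)
        num = offset - dot(normal, p0)
        if denom == 0.0:
            # Segment is parallel to this face: reject it if it lies outside.
            if num < 0.0:
                return None
            continue
        t = num / denom
        if denom > 0.0:
            t_exit = min(t_exit, t)    # moving outward through this face
        else:
            t_enter = max(t_enter, t)  # moving inward through this face
        if t_enter > t_exit:
            return None
    return t_enter, t_exit


def boundary_crossings(planes: Sequence[Plane], p0: Vec3, p1: Vec3) -> List[Vec3]:
    """Points where the segment p0->p1 crosses the hull surface (no tolerance)."""
    clipped = clip_segment(planes, p0, p1)
    if clipped is None:
        return []
    t_enter, t_exit = clipped
    direction = tuple(b - a for a, b in zip(p0, p1))

    def point_at(t: float) -> Vec3:
        return tuple(a + t * d for a, d in zip(p0, direction))

    hits: List[Vec3] = []
    if t_enter > 0.0:
        hits.append(point_at(t_enter))
    if t_exit < 1.0:
        hits.append(point_at(t_exit))
    return hits


# Unit cube [0, 1]^3 written as six half-spaces.
UNIT_CUBE: List[Plane] = [
    ((1.0, 0.0, 0.0), 1.0), ((-1.0, 0.0, 0.0), 0.0),
    ((0.0, 1.0, 0.0), 1.0), ((0.0, -1.0, 0.0), 0.0),
    ((0.0, 0.0, 1.0), 1.0), ((0.0, 0.0, -1.0), 0.0),
]

if __name__ == "__main__":
    # A 0.01-long ray starting just inside the x = 1 face.
    print(boundary_crossings(UNIT_CUBE, (0.995, 0.5, 0.5), (1.005, 0.5, 0.5)))
```

The check is exact up to floating-point rounding, so a ray of any length that leaves the hull yields one crossing. If the hull comes from `scipy.spatial.ConvexHull`, each row of `hull.equations` is `[normal, offset]` with interior points satisfying `normal·x + offset <= 0`, so `d = -offset` converts it to this form.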

Any ideas? Thanks in advance.