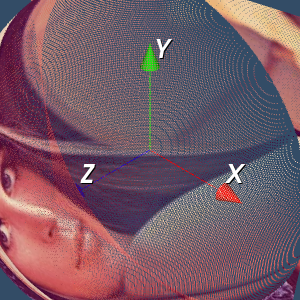

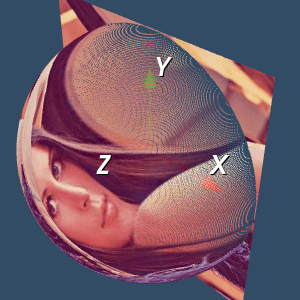

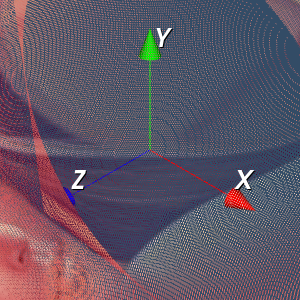

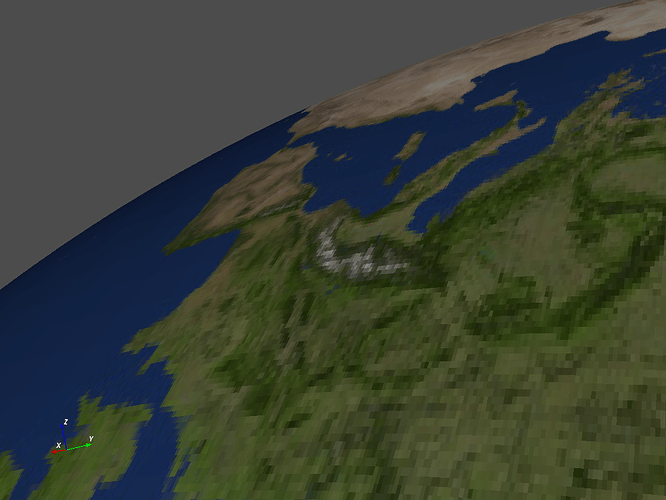

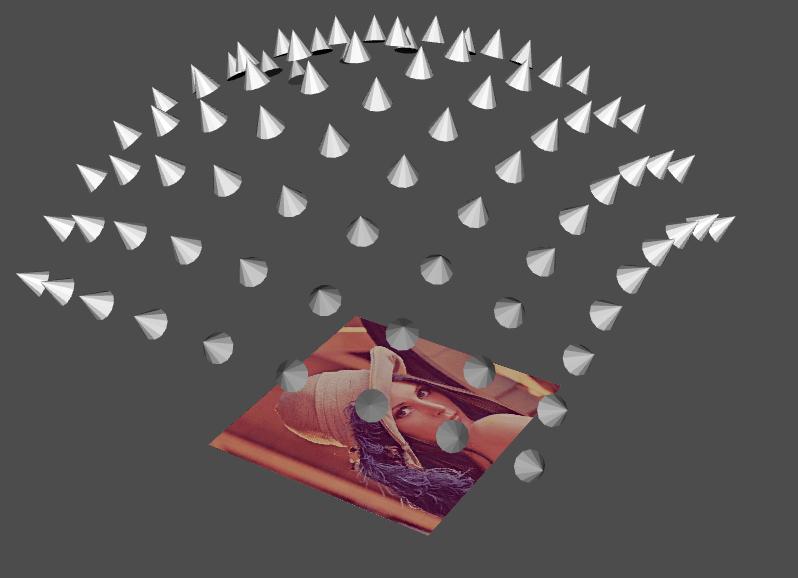

Yes, sure. I have a working algorithm, but it is not the best solution. The whole workflow consists of the following steps:

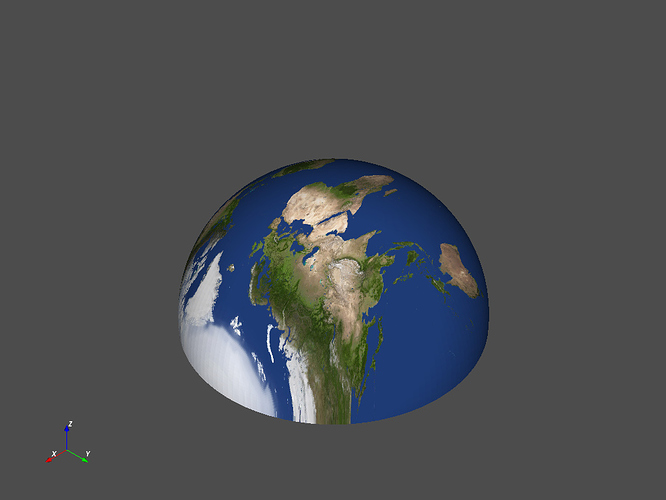

- Read an STL file (.stl) as a 3D model, called model A.

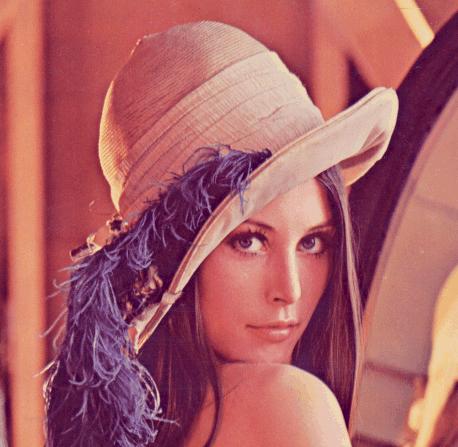

- Read an image file (.png or .jpg).

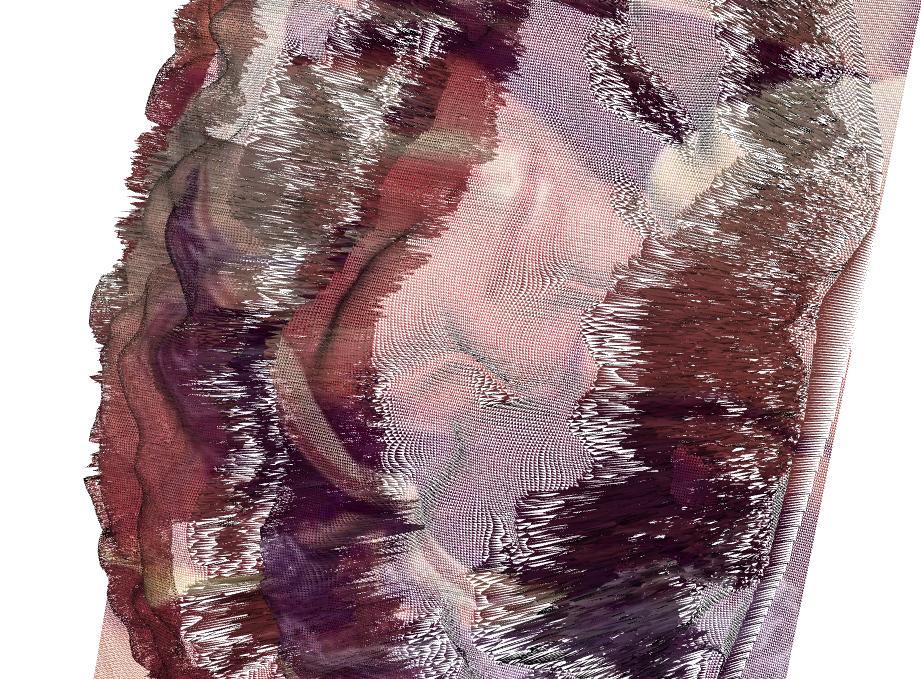

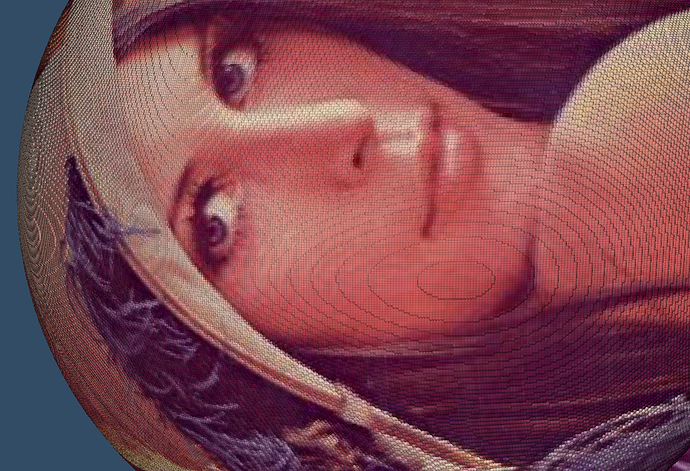

- Based on the image size and the shape of the STL model, create a meshgrid for color interpolation. This gives a color-textured 3D model, called model B.

- For each point in model A, find the “nearest” point in model B and assign that point's color vector to it.

- Use a lookup table to visualize the colors on the STL model.
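A minimal NumPy sketch of the meshgrid and nearest-point steps, under assumptions that are not stated in the post: model B is taken to be the image laid flat over the x-y bounding box of model A (a projection along z), so every pixel becomes one node of a regular meshgrid. Because that grid is regular and axis-aligned, the nearest node can be found by rounding x and y to a column and row index instead of searching. If model B is a curved 3D surface, a real spatial search (for example a k-d tree) would be needed instead. The names `make_colored_grid` and `assign_nearest_colors` are hypothetical.

```python
import numpy as np


def make_colored_grid(image, bounds):
    """Hypothetical 'model B': one grid node per image pixel over the x-y bounds.

    `image` is an (H, W, 3) RGB array; `bounds` is (xmin, xmax, ymin, ymax)
    with xmax > xmin and ymax > ymin. The image is laid flat (projected along z).
    """
    h, w = image.shape[:2]
    xmin, xmax, ymin, ymax = bounds
    xs = np.linspace(xmin, xmax, w)
    ys = np.linspace(ymax, ymin, h)  # image row 0 is the top edge (largest y)
    gx, gy = np.meshgrid(xs, ys)     # both have shape (H, W)
    grid_xy = np.column_stack([gx.ravel(), gy.ravel()])
    grid_rgb = image.reshape(-1, 3)  # same row-major order as grid_xy
    return grid_xy, grid_rgb


def assign_nearest_colors(points, image, bounds):
    """Give each (x, y, z) point of model A the color of its nearest grid node in x-y.

    On a regular axis-aligned grid the x and y distances can be minimized
    independently, so rounding to the nearest column/row index finds the
    nearest node without any search.
    """
    h, w = image.shape[:2]
    xmin, xmax, ymin, ymax = bounds
    pts = np.asarray(points, dtype=float)
    col = np.rint((pts[:, 0] - xmin) / (xmax - xmin) * (w - 1)).astype(int)
    row = np.rint((ymax - pts[:, 1]) / (ymax - ymin) * (h - 1)).astype(int)
    # Points outside the bounds snap to the nearest edge node
    col = np.clip(col, 0, w - 1)
    row = np.clip(row, 0, h - 1)
    return image[row, col]  # (N, 3): one color per point of model A
```

With VTK, `points` could come from `vtk.util.numpy_support.vtk_to_numpy(obj.GetPoints().GetData())` and `bounds` from `obj.GetBounds()[:4]`, which returns `(xmin, xmax, ymin, ymax)`.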

Note: I am not allowed to attach files, so I uploaded them (the .stl files and color.npy) to Google Drive:

https://drive.google.com/drive/folders/1Qa9kysHJPxWXejoeTUjJYQW5-hI0vDSO?usp=sharing

The code is below. It is a bit complicated, so I only show the main function here; the necessary .npy files are in the Google Drive folder above:

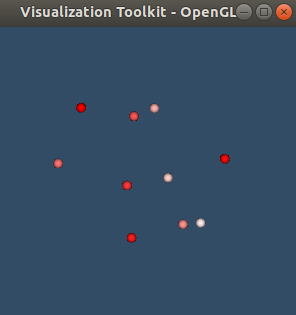

```python
import numpy as np
import vtk


def show_colorized_stl(stl_path, colorstl_file):
    """Show the colorized STL file.

    stl_path: path of the .stl file (self.stl_path in the original class)
    colorstl_file: path of color.npy (self.colorstl_file in the original class)
    """
    # Read the STL model; we first need its points to attach the colors to
    f = vtk.vtkSTLReader()
    f.SetFileName(stl_path)
    f.Update()

    # Get the polydata produced by the reader
    obj = f.GetOutputDataObject(0)
    min_z, max_z = obj.GetBounds()[4:]

    lut = vtk.vtkLookupTable()
    lut.SetTableRange(min_z, max_z)
    lut.Build()

    # Per-point RGB colors (3 unsigned char components per point)
    colors = vtk.vtkUnsignedCharArray()
    colors.SetNumberOfComponents(3)
    colors.SetName("Colors")

    # Load the per-point colors (N x 3 array, one row per STL point)
    color = np.load(colorstl_file)

    for i in range(obj.GetNumberOfPoints()):
        colors.InsertNextTuple3(color[i, 0], color[i, 1], color[i, 2])

    obj.GetPointData().SetScalars(colors)

    mapper = vtk.vtkPolyDataMapper()
    mapper.SetInputDataObject(obj)
    # In the mapper's default color mode, unsigned char scalars are used
    # directly as colors, so the scalar range and lookup table below do not
    # change what is displayed.
    mapper.SetScalarRange(min_z, max_z)
    mapper.SetLookupTable(lut)

    actor = vtk.vtkActor()
    actor.SetMapper(mapper)

    renderer = vtk.vtkRenderer()
    renderer.AddActor(actor)
    renderer.SetBackground(.1, .2, .4)

    renw = vtk.vtkRenderWindow()
    renw.AddRenderer(renderer)

    iren = vtk.vtkRenderWindowInteractor()
    iren.SetRenderWindow(renw)

    renw.Render()
    iren.Start()
```

I am still looking for a better way to do this. There are a few open problems:

- The effective image resolution is tied to the triangulation mesh in the STL file, since colors are only assigned to mesh points.

- I am still figuring out how to use a triangulation filter.