This issue has come up again. This time, it is very simple and clear case and it is hard to justify the error.

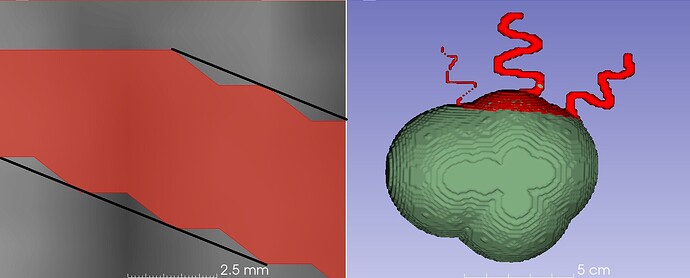

Example use case: We have a head CT, we create a binary image using thresholding to segment the bone, convert to polydata - everything looks good. However, when we apply vtkWindowedSincPolyDataFilter, the scaling of the resulting polydata can be changed by up to about 0.7% (either making the output smaller or larger, depending on the chosen number of iterations).

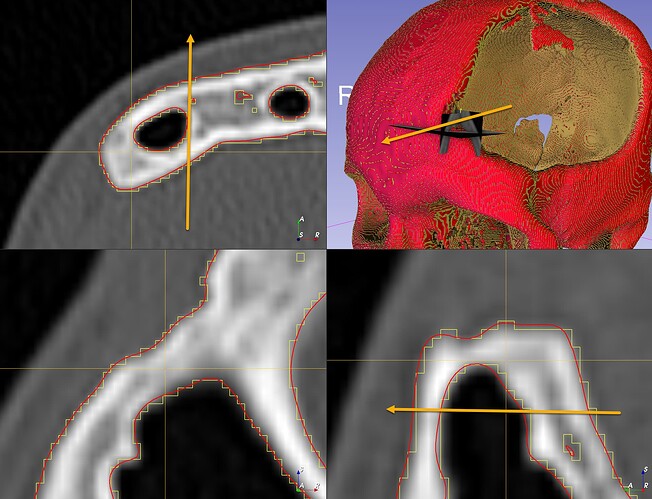

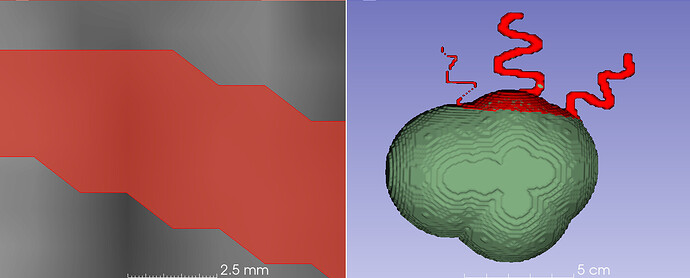

The image below shows result with 43 iterations. You can see that the smoothed red contour appears shifted towards outside in the slice views, because the output is somewhat larger. It is also clear that the smoothed red model is larger because the outer surface is all read, while inside the cavity mostly the non-smoothed yellow model is visible.

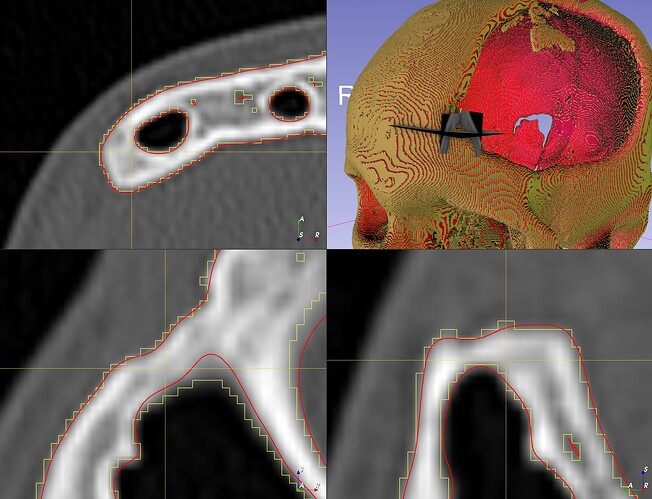

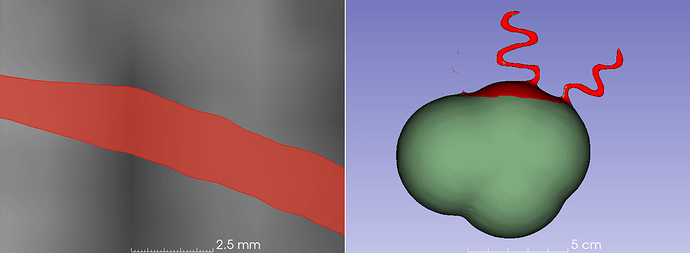

With 78 iterations, the scaled model is somewhat smaller than the input (the red model mostly appears inside):

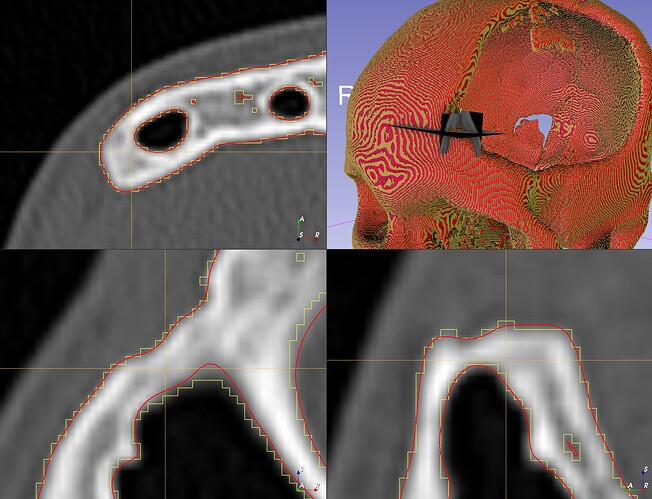

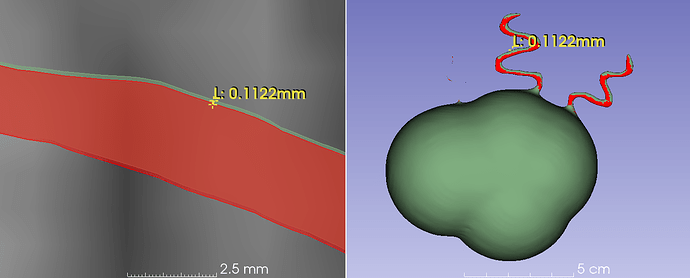

When choosing to use 60 iterations, the scaling is looks about correct - you can see that the non-smoothed yellow surface oscillates around the smoothed red contour very similarly both inside and outside:

Unfortunately, the error does not converge to 0 as the number of iterations grow, but it is periodic, so we cannot set number of iterations to a large number to avoid the error. For example, for passband value of 0.063, the period is 60 iterations. Smoothed polydata is too large when number of iterations = 43, 103, 163, …; too small when number of iterations is 78, 148, 208, … Scaling is correct between them at every half period at 30, 60, 90, … Unfortunately, the period length depends on the passband value, so we cannot just hardcode to always use 30 iterations.

This video shows the smoothing result with varying the number of iterations changing from 1 to 300:

The challenge of making the filter preserve the overall scale of the mesh is well known and there is a mechanism implemented in the filter to address it but it seems that it is not working well and I don’t see any obvious reasons why.

Test data set: https://github.com/lassoan/PublicTestingData/releases/download/data/HeadCT-threshold270-surface.vtp

Code to reproduce the problem:

# Reproduce shift by smoothing

inputModelFilename = "path/to/HeadCT-threshold270-surface-ncd.vtp"

#iterations = 43 # too large

#iterations = 60 # correct size

iterations = 78 # too small

passBand = 0.063

# Read input

inputModelReader = vtk.vtkXMLPolyDataReader()

inputModelReader.SetFileName(inputModelFilename)

inputModelReader.Update()

inputPolyData = inputModelReader.GetOutput()

# Apply smoothing

smoother = vtk.vtkWindowedSincPolyDataFilter()

smoother.SetInputData(inputPolyData)

smoother.SetPassBand(passBand)

smoother.SetNumberOfIterations(iterations)

smoother.NormalizeCoordinatesOn()

smoother.Update()

# Render results

renderWindow = vtk.vtkRenderWindow()

renderWindow.SetSize(600, 600)

ren = vtk.vtkRenderer()

renderWindow.AddRenderer(ren)

iren = vtk.vtkRenderWindowInteractor()

iren.GetInteractorStyle().SetCurrentStyleToTrackballCamera()

iren.SetRenderWindow(renderWindow)

mapperInput = vtk.vtkPolyDataMapper()

mapperInput.SetInputData(inputPolyData)

actorInput = vtk.vtkActor()

actorInput.SetMapper(mapperInput)

actorInput.GetProperty().SetColor(1, 1, 0)

ren.AddActor(actorInput)

mapper = vtk.vtkPolyDataMapper()

mapper.SetInputConnection(smoother.GetOutputPort())

actor = vtk.vtkActor()

actor.SetMapper(mapper)

actor.GetProperty().SetColor(1, 0, 0)

actor.GetProperty().BackfaceCullingOff()

ren.AddActor(actor)

iren.Initialize()

ren.GetActiveCamera().Pitch(90)

ren.ResetCamera()

ren.GetActiveCamera().Yaw(30)

ren.ResetCamera()

ren.GetActiveCamera().Zoom(1.5)

renderWindow.Render()

iren.Start()

@will.schroeder could you look into this? Many people use this filter to create 3D-printable models from images and it would be important to avoid any unnecessary systematic scaling errors (even if it is less than 1%).

It’s probably worth looking at Taubin’s original paper to see if there are any comments related to this behavior.

It’s probably worth looking at Taubin’s original paper to see if there are any comments related to this behavior.