Hello everyone,

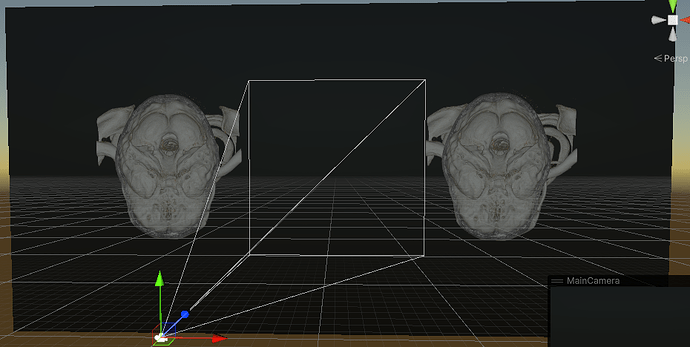

I’m trying to read the Z-buffer during volume rendering. Thanks to similar topic (Zbuffer with Volume Rendering) I know I have to switch mapper to RenderToImage mode. Currently I move frame to DirectX by pixel transfer and move to Unity with following result for stereo view:

Now I want to extend the texture so that its other half holds the depth information from the volume rendering.
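Here is one possible layout for that texture, as a minimal sketch. It assumes the color and depth images have the same size and that depth has already been quantized to 0..255. The helper `pack_color_and_depth` is hypothetical, and pure Python lists stand in for the real pixel buffers. The depth is written as gray (equal R, G and B). After RGB-to-YUV conversion, a gray pixel has neutral chroma, so the depth value ends up in the luma plane, which NV12 keeps at full resolution.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def pack_color_and_depth(color: List[List[RGB]], depth: List[List[int]]) -> List[List[RGB]]:
    """Build a texture twice as wide: color on the left half, depth (as gray) on the right.

    Hypothetical helper: `color` and `depth` are row-major images of identical size,
    and depth values are assumed to be already quantized to 0..255.
    """
    if len(color) != len(depth) or any(len(c) != len(d) for c, d in zip(color, depth)):
        raise ValueError("color and depth images must have the same dimensions")
    packed = []
    for color_row, depth_row in zip(color, depth):
        # Replicate the 8-bit depth into all three channels so it carries no chroma.
        gray_row = [(d, d, d) for d in depth_row]
        packed.append(list(color_row) + gray_row)
    return packed
```

For stereo, the same packing can be done per eye and the two results placed side by side, or stacked, depending on what the Unity side expects.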

The first issue: I’m not sure whether the GetScalarPointer method of the vtkImageData class is the proper way to get pixel values.
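Whatever accessor is used, it helps to know how the scalars are laid out in memory. In C++, `GetScalarPointer()` gives the raw contiguous buffer. In Python, `vtk_to_numpy(image.GetPointData().GetScalars())` from `vtkmodules.util.numpy_support` exposes the same data. VTK stores image scalars with x varying fastest, then y, then z, and interleaves the components of each point (e.g. R, G, B, A). The sketch below computes the offset of a pixel in such a flat buffer. `scalar_offset` and `read_pixel` are hypothetical helpers, not VTK API.

```python
from typing import Sequence, Tuple


def scalar_offset(i: int, j: int, k: int, dims: Tuple[int, int, int], num_components: int) -> int:
    """Flat index of the first component of point (i, j, k) in a vtkImageData-style buffer."""
    nx, ny, nz = dims
    if not (0 <= i < nx and 0 <= j < ny and 0 <= k < nz):
        raise IndexError("point index out of range")
    # x varies fastest, then y, then z; components are interleaved per point.
    return ((k * ny + j) * nx + i) * num_components


def read_pixel(buffer: Sequence[int], i: int, j: int,
               dims: Tuple[int, int, int], num_components: int) -> Tuple[int, ...]:
    """Return all components of pixel (i, j) of a single-slice (2D) image."""
    start = scalar_offset(i, j, 0, dims, num_components)
    return tuple(buffer[start:start + num_components])
```

Note that images read back from a render window typically have row j = 0 at the bottom (OpenGL convention). A DirectX texture has its first row at the top, so a vertical flip may be needed.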

The second issue: I currently have two renderers (one per eye) with one volume/mapper attached to both of them. When I call GetColorImage or GetDepthImage on the mapper, I receive only one view.

Should I change the camera settings so that a single renderer handles stereo rendering, and would the mapper then give me the expected result? If not, how can I get a stereo depth image combined with the RGB one?

PS

The purpose of my work is to send this texture to VR via Remote Rendering. I also have a related question: is the depth value in VTK linear, and can the depth range be changed from the camera clipping range to the volume bounding box? At the streaming stage I convert the texture to NV12, which is not lossless. That is fine for the RGB data, but with a large depth range the error on the client side is too significant.
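For context on the linearity question: with a perspective camera, the values in a standard OpenGL depth buffer are not linear in distance. With parallel projection (`vtkCamera.ParallelProjectionOn()`), they are linear. The sketch below assumes the mapper returns ordinary [0, 1] window-space depths from a perspective projection, which I have not verified for the volume mapper. It converts such a depth back to eye distance using the near/far planes (e.g. from `vtkCamera.GetClippingRange()`). It then renormalizes that distance to the depth span of the volume's bounding box before quantizing to 8 bits, so that all 256 levels cover the volume instead of the whole clipping range. The bounding box can come from `vtkVolume.GetBounds()` and the view from `vtkCamera.GetPosition()` / `GetDirectionOfProjection()`. All helper names below are hypothetical. A possibly simpler alternative is to tighten the clipping range itself, e.g. with `vtkRenderer.ResetCameraClippingRange(bounds)`.

```python
import math
from typing import Sequence, Tuple


def linearize_depth(d: float, near: float, far: float) -> float:
    """Map a [0, 1] perspective depth-buffer value to distance along the view direction.

    Standard OpenGL projection with depth range [0, 1]: z_ndc = 2d - 1 and
    z_eye = 2nf / (f + n - z_ndc (f - n)), which simplifies to nf / (f - d (f - n)).
    """
    return near * far / (far - d * (far - near))


def view_depth_range(bounds: Sequence[float], position: Sequence[float],
                     direction: Sequence[float]) -> Tuple[float, float]:
    """Min/max distance of the 8 bounding-box corners along the view direction."""
    xmin, xmax, ymin, ymax, zmin, zmax = bounds
    norm = math.sqrt(sum(c * c for c in direction))
    ux, uy, uz = (c / norm for c in direction)
    px, py, pz = position
    depths = [
        (x - px) * ux + (y - py) * uy + (z - pz) * uz
        for x in (xmin, xmax) for y in (ymin, ymax) for z in (zmin, zmax)
    ]
    return min(depths), max(depths)


def quantize_depth(d: float, near: float, far: float,
                   vol_near: float, vol_far: float, levels: int = 256) -> int:
    """Quantize a depth-buffer value to `levels` steps spanning only [vol_near, vol_far]."""
    if vol_far <= vol_near:
        raise ValueError("vol_far must be greater than vol_near")
    z = linearize_depth(d, near, far)
    t = (z - vol_near) / (vol_far - vol_near)
    t = min(max(t, 0.0), 1.0)  # anything outside the volume (e.g. background) is clamped
    return round(t * (levels - 1))
```

For example, with near = 1 and far = 3, a buffer value of 0.5 corresponds to a distance of 1.5, not the midpoint 2. This nonlinearity is why quantizing raw depth wastes precision far from the camera. On the client, the inverse is z = vol_near + q / 255 * (vol_far - vol_near), so vol_near and vol_far have to be sent along with each frame.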

Best regards,

Konrad