I created this thread to document my progress on getting VTK visualization running on the Oculus Quest 2 standalone VR headset. Currently the VR example in the VTK.js project doesn’t work in the native Oculus Browser, but it does work in the Firefox Reality browser.

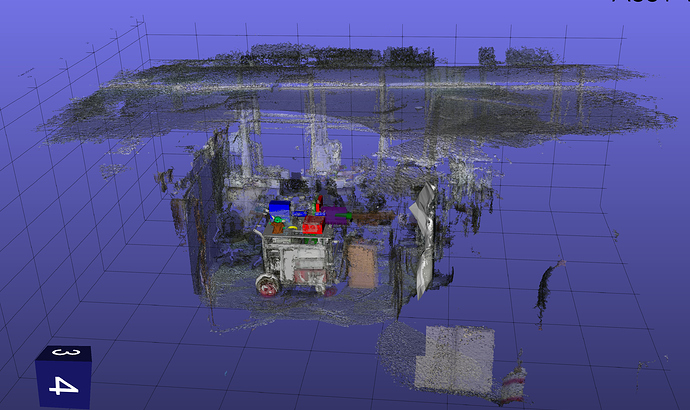

There are a few issues with the visualization, nothing major though:

- The visualization is a tad rough; anti-aliasing will likely need to be enabled.

- There’s no in-VR menu, controller models or interaction.

- Hand-tracking isn’t currently supported by the browser.

- The camera light is too narrow.

- There’s no floor for reference.

The initial work will be getting a proper exporter working in my existing VTK program so that it can export a complete scene. A ParaView macro exists here that can do this; however, last time I checked, it relies on modules that aren’t available in plain VTK (e.g. ‘from paraview import simple’). If I can figure out a VTK-native method, I’ll then likely merge sections of the VTK.js SceneExplorer and VR example to get something usable for fast visualization.

I’ll then see if I can implement some interaction using the controllers; I have some existing controller models that I created for a separate project and that should work. The first step will likely be button presses and maybe joystick interaction, with raycasting ideally added eventually.
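For the button-press and joystick step, a rough sketch of the per-frame logic might look like the following. It assumes the browser exposes each controller as a standard Gamepad object (as the WebXR Gamepads Module describes) and that the thumbstick sits on axes 2 and 3, which is what the "xr-standard" mapping specifies. `createControllerTracker`, `GamepadLike` and `ControllerEvents` are hypothetical names made up for this illustration; they are not part of VTK.js or WebXR.

```typescript
// Minimal structural types so the sketch compiles without WebXR typings.
// They mirror the `buttons[i].pressed` and `axes` fields of a standard Gamepad.
interface ButtonLike { pressed: boolean; value: number; }
interface GamepadLike {
  buttons: ReadonlyArray<ButtonLike>;
  axes: ReadonlyArray<number>;
}

interface ControllerEvents {
  pressed: number[];               // buttons that went down since the last update
  released: number[];              // buttons that went up since the last update
  stick: { x: number; y: number }; // thumbstick after dead-zone filtering
}

// Hypothetical helper: remembers the previous button state for one controller
// and reports press/release edges, so a held button only fires once.
function createControllerTracker(deadZone: number = 0.15) {
  const previous: boolean[] = [];

  // Values inside the dead zone are treated as "stick at rest".
  const filter = (v: number | undefined): number =>
    v !== undefined && Math.abs(v) >= deadZone ? v : 0;

  return function update(pad: GamepadLike): ControllerEvents {
    const pressed: number[] = [];
    const released: number[] = [];
    pad.buttons.forEach((button, i) => {
      const wasDown = previous[i] === true;
      if (button.pressed && !wasDown) pressed.push(i);
      if (!button.pressed && wasDown) released.push(i);
      previous[i] = button.pressed;
    });
    // Assumption: "xr-standard" layout, thumbstick on axes[2] / axes[3].
    return {
      pressed,
      released,
      stick: { x: filter(pad.axes[2]), y: filter(pad.axes[3]) },
    };
  };
}
```

In a real session, one tracker per controller would presumably be fed `inputSource.gamepad` for each entry of `session.inputSources` once per rendered frame. Keeping the edge detection as a plain function means it can be tried out in non-VR mode with hand-built objects before touching the headset.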

User interaction will likely be limited to the non-VR mode until I get something usable; thankfully it’s fairly easy to switch between the two.

I have no JavaScript experience, so this might take a while. Any help would be appreciated.