I’m currently doing a PhD in Nuclear Physics so the kinds of data I work with span a pretty wide spectrum:

- Most of my data is discrete polydata spread across a germanium crystal volume as voxels (e.g. risetime maps, PSA deviation), in which case the glyph3D filter works really well.

- I do electromagnetic simulations of charge distributions to create signal responses, so I use the vtkImageData class a lot to check how the fields look within complex geometry, combined with line glyphs to show particle tracks. I also slice these fields into 2D and perform contouring to check for discontinuities in the fields.

- The core of my PhD is the development of efficient search algorithms using topological data analysis, so it’s useful to visualize several hundred thousand glyphs with weighted and directed edges, which VTK does incredibly well. By linking the point IDs I can also see how the response of certain geometric positions is expressed in a multidimensional (~100D) response space.

- I use VTK for visualizing the geometry of experimental setups for real-time control (e.g. it’s useful to see what part of the crystal is being hit by the gamma-beam without visual inspection). This is possible using VTK’s STL reader.

- I also use it for the validation of geometry for nuclear simulation in GEANT4 and for combining the produced data with CAD models (GEANT4 has an amazingly poor renderer). I wrote my own importer & exporter class for GDML files, but VTK is used to combine the geometry on render.

I’ve also gotten involved in a few other projects around the lab which use some of the methods described above:

- I wrote a 3D reconstruction software package for Iodine-131 Thyroid imaging for cancer diagnosis that combines CT and gamma-camera data for automatic segmentation.

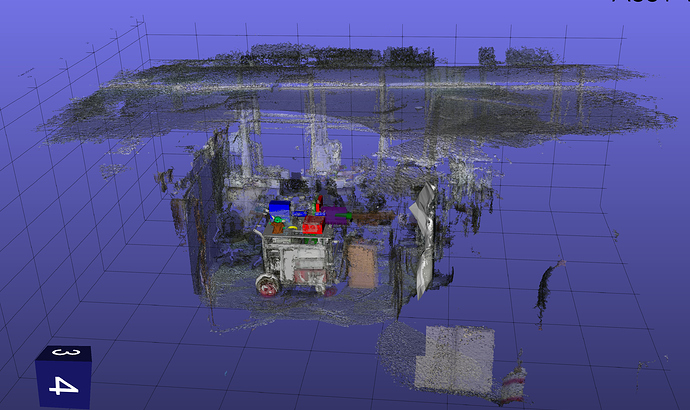

- I wrote a visualisation package for a Compton Camera that allows for the combination of LIDAR SLAM data with Compton backprojection cones and CAD models to determine the presence of nuclear waste contaminants in places like nuclear power plants.
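For anyone unfamiliar with backprojection cones, here is a minimal sketch of where the cone opening angle comes from. It is not code from my package; it only applies the standard Compton scattering formula, and it assumes the scattered photon is fully absorbed in the second interaction so that the incident energy is the sum of the two deposits. The function name `compton_cone_half_angle` and its parameters are made up for illustration.

```python
import math

# Electron rest energy m_e * c^2 in keV.
ELECTRON_REST_ENERGY_KEV = 510.99895


def compton_cone_half_angle(e_deposited_kev, e_scattered_kev):
    """Return the cone half-angle (radians) for one two-interaction event.

    e_deposited_kev: energy left in the scatterer by the recoil electron.
    e_scattered_kev: energy of the scattered photon, assumed here to be
                     fully absorbed in the second interaction.
    """
    if e_deposited_kev <= 0 or e_scattered_kev <= 0:
        raise ValueError("energies must be positive")

    # Assumption: incident energy is the sum of both deposits.
    e_incident_kev = e_deposited_kev + e_scattered_kev

    # Compton formula: cos(theta) = 1 - m_e c^2 * (1/E' - 1/E0)
    cos_theta = 1.0 - ELECTRON_REST_ENERGY_KEV * (
        1.0 / e_scattered_kev - 1.0 / e_incident_kev
    )

    # Events outside [-1, 1] are not kinematically valid Compton scatters.
    if not -1.0 <= cos_theta <= 1.0:
        raise ValueError("energies are not consistent with Compton scattering")

    return math.acos(cos_theta)


if __name__ == "__main__":
    # Hypothetical event: 200 keV in the scatterer, 461.7 keV in the absorber.
    angle = compton_cone_half_angle(200.0, 461.7)
    print(f"cone half-angle: {math.degrees(angle):.1f} degrees")
```

The cone axis is the line joining the two interaction positions and the apex sits at the first interaction, which is what gets drawn into the scene alongside the SLAM and CAD data.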

The main feature I had in mind for this work is a roomscale 6-DoF display of a scene at 1:1 scale that would let the user walk around and inspect certain actors. For example, if the lab Compton Camera were used to scan unusual activity in a concrete wall, it’d be useful for an RPS to inspect the activity projection on a SLAM reconstruction of the environment before visiting the contaminant, lowering their exposure to harmful radiation.

I currently have an example of this working in VTK; it combines several kinds of poly data and image data in the same scene.

I’ve had a cursory look at some other JavaScript viewers online. A lot of them have specific use cases (e.g. point clouds, model viewing) but seem pretty capable at their specific jobs. Notable examples include VRMol for molecular visualization and Potree for point clouds (which states that it works in VR, however I had no success). VTK and vtk.js have the benefit of supporting multiple data types at once. The only other software I’ve found online that does that is the ParaView Web suite, however AFAIK there’s no VR support at the moment.

A-Frame has glTF support and probably the most VR-friendly interface, so I could write a viewer in that and use the glTF exporter class like you suggest. However, AFAIK things like 3D image data aren’t supported by the exporter class, and A-Frame doesn’t really have the functionality to do more complicated operations like contouring & slicing.

The vtk.js example by comparison is a little simple, however as it’s mainly there to demonstrate the VR camera renderer I don’t think that’s too much of an issue. I’m pretty confident that the features exposed in vtk.js should be enough to get something workable, however the scene will likely be static until I can figure out an interaction scheme.

Raycasting from the controllers would go a long way towards a viable solution for interaction. VRMol effectively dedicates an entire virtual wall of 3D text to work as a menu. Something like that should be relatively simple to implement with a prop picker and callbacks.
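To make the raycasting idea concrete, here is a library-free sketch in plain Python. It is not vtk.js or VTK code and does not use their picker classes: it assumes the controller pose gives a ray origin and direction, and it approximates each actor by an axis-aligned bounding box. The names `ray_box_distance`, `pick_nearest` and the `on_pick` callback are hypothetical.

```python
import math


def ray_box_distance(origin, direction, box_min, box_max):
    """Distance along the ray to an axis-aligned box, or None on a miss.

    Uses the slab method. The result is in units of the direction
    vector's length, so pass a normalised direction to get a distance.
    """
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near = max(t_near, t0)
        t_far = min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near


def pick_nearest(origin, direction, actors, on_pick=None):
    """Return the name of the closest actor hit by the ray, or None.

    actors maps a name to a (box_min, box_max) pair of 3-tuples.
    on_pick, if given, is called with (name, distance) on a hit.
    """
    best_name, best_t = None, math.inf
    for name, (box_min, box_max) in actors.items():
        t = ray_box_distance(origin, direction, box_min, box_max)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    if best_name is not None and on_pick is not None:
        on_pick(best_name, best_t)
    return best_name


if __name__ == "__main__":
    # Hypothetical scene: a menu panel in front of a wall.
    scene = {
        "menu": ((-1.0, -1.0, 5.0), (1.0, 1.0, 6.0)),
        "wall": ((-5.0, -5.0, 10.0), (5.0, 5.0, 11.0)),
    }
    pick_nearest((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), scene,
                 on_pick=lambda name, t: print("picked", name, "at", t))
```

In a real viewer the ray would come from the controller pose each frame and the callback would trigger the menu action or highlight the actor; bounding boxes are only a cheap first pass before any finer intersection test.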

I’ve had my Oculus Quest 2 for less than a day, so my findings might be a little immature. Part of my motivation for posting in this forum is to establish whether there’s anyone else out there interested in developments like this.