Hi,

I am trying to reslice a vtkImageData with an interactive vtkBoxWidget. Once the box is placed correctly, I pass the vtkTransform obtained from the box widget to vtkImageReslice.

My tests are with VTK 9.2.6 from conda-forge.

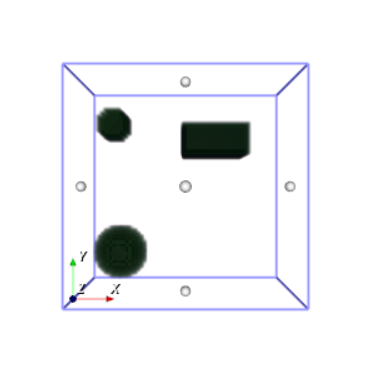

To test that I am doing things correctly, I started by simply cropping a test image along the 3 axes. My test image contains a sphere (bottom left), a cylinder (top left) and a parallelepiped (top right).

I try to crop the image above around the sphere. The box I configure is not a cube but a parallelepiped, see below. My expectation is that the output will be a cropped image, i.e. its dimensions will be smaller along all axes.

The code below produces the visual output I expect; however, it keeps the original input extent and dimensions and changes the spacing instead. What I want is to keep the spacing and reduce the extent (dimensions), i.e. I would like to crop.

    trans = vtk.vtkTransform()
    box_widget.GetTransform(trans)

    reslice = vtk.vtkImageReslice()
    reslice.SetInterpolationModeToCubic()
    reslice.SetResliceTransform(trans)
    reslice.SetInputData(img)
    reslice.TransformInputSamplingOn()
    reslice.Update()
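To make the trade-off between spacing and extent concrete, here is a small arithmetic sketch. It assumes the usual point-based convention that an axis with `n` voxels at spacing `s` covers a length of `(n - 1) * s`. The function names and the numbers (101 voxels, a box edge of 25.0 units) are made up for illustration and do not come from my data.

```python
import math


def spacing_for_fixed_dims(length, n_voxels):
    """Spacing needed to cover `length` when the voxel count is kept fixed."""
    return length / (n_voxels - 1)


def dims_for_fixed_spacing(length, spacing):
    """Voxel count needed to cover `length` when the spacing is kept fixed."""
    # Small epsilon guards against float round-off such as 0.3 / 0.1 = 2.999...
    return int(math.floor(length / spacing + 1e-9)) + 1


# Hypothetical axis: 101 voxels at spacing 1.0, i.e. 100.0 units long.
# A box edge of 25.0 units along that axis gives either:
shrunk_spacing = spacing_for_fixed_dims(25.0, 101)  # what I currently get
cropped_dims = dims_for_fixed_spacing(25.0, 1.0)    # what I would like
```

The first result corresponds to the behaviour I see (same dimensions, smaller spacing), the second to the crop I am after (same spacing, fewer voxels).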

I have tried to change the output origin and extent of vtkImageReslice, but never got it to work as intended. To know how many voxels I should have in the output, I currently calculate the distance between parallel planes of the box.

    reslice.SetOutputExtent(*extent)
    reslice.SetOutputOrigin(*origs)
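For reference, this is roughly how I understand the output settings would fit together in the simplest case. It is only a sketch under several assumptions: the box is axis-aligned (no rotation), the input image has an identity direction matrix, and no reslice transform is set on the filter, so output world coordinates equal input world coordinates. The helper name `configure_axis_aligned_crop` and the `bounds` argument are hypothetical; the only VTK calls used are `SetOutputSpacing`, `SetOutputOrigin` and `SetOutputExtent`.

```python
import math


def configure_axis_aligned_crop(reslice, bounds, spacing):
    """Set output spacing, origin and extent on a vtkImageReslice-like object.

    bounds  : (xmin, xmax, ymin, ymax, zmin, zmax) of the box in world coordinates
    spacing : input image spacing, reused unchanged for the output
    """
    # Voxels per axis: one per spacing step plus the sample at the box corner.
    counts = [
        int(math.floor((bounds[2 * i + 1] - bounds[2 * i]) / spacing[i] + 1e-9)) + 1
        for i in range(3)
    ]
    # VTK extents are inclusive index ranges, here starting at 0 on every axis.
    extent = [v for n in counts for v in (0, n - 1)]
    # The first output voxel sits on the minimum corner of the box.
    origin = [bounds[0], bounds[2], bounds[4]]

    reslice.SetOutputSpacing(*spacing)
    reslice.SetOutputOrigin(*origin)
    reslice.SetOutputExtent(*extent)
    return origin, extent


# Intended use with the real filter (assumes `img` and `bounds` already exist):
#   reslice = vtk.vtkImageReslice()
#   reslice.SetInputData(img)
#   configure_axis_aligned_crop(reslice, bounds, img.GetSpacing())
#   reslice.Update()
```

A rotated box would additionally need the box orientation passed to the filter, which this sketch does not attempt.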

The important bits of my code are here: CIL-work/viewer/minimal_box_widget_example.py at 8572d1a2b4a0c324df73db48e765ab429f228aba · paskino/CIL-work · GitHub

Any help is welcome.

Cheers

Edo